S3 Storage For DevOps Backups

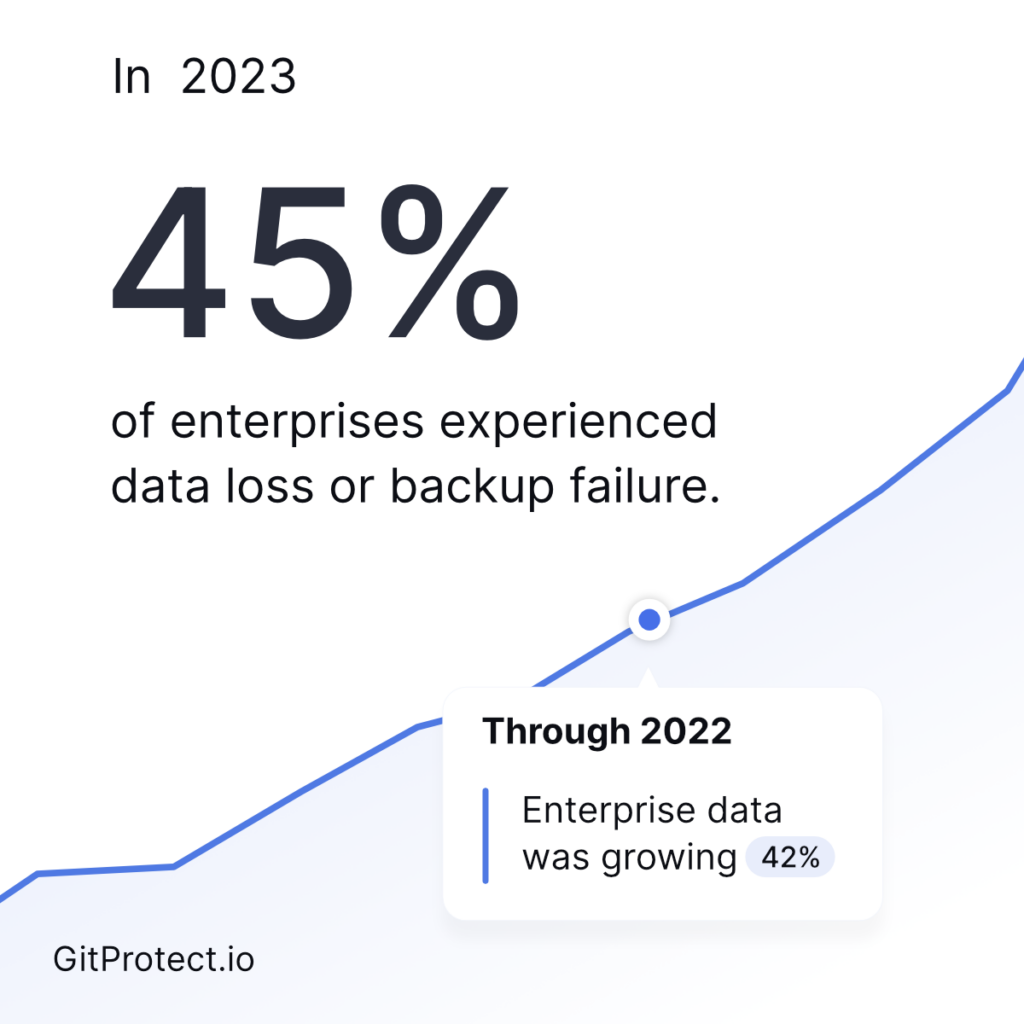

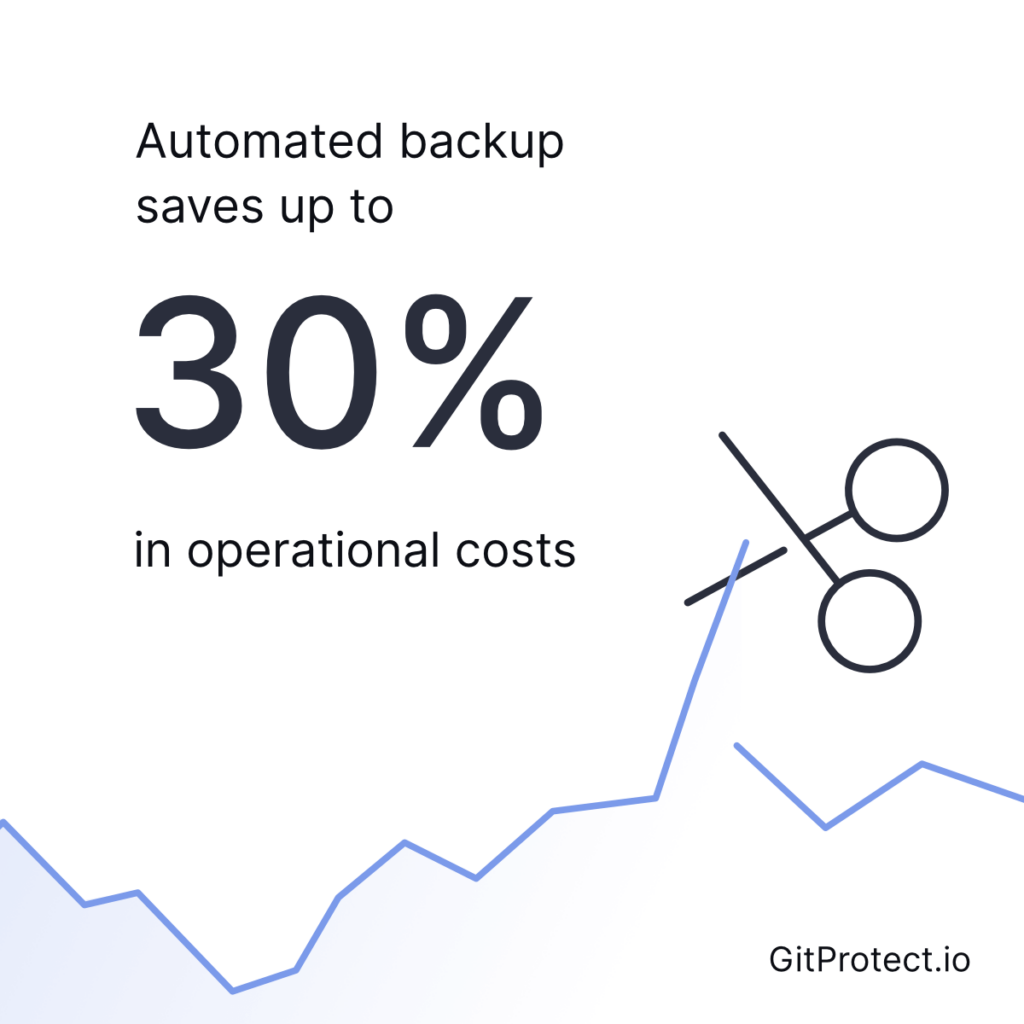

Choosing S3 storage from a provider such as AWS, Google Cloud, or Azure Blob Storage is a strategic choice, especially as data volumes grow fast and disaster recovery strategies require more focus. Such an investment may reduce operational overhead and optimize costs, and it opens up new technical and economic perspectives.

99% of IT decision-makers state they have a backup strategy. And yet, 26% of them couldn’t fully restore their data when recovering from backups (according to a 2022 Apricorn survey).

That means diversifying how and where you back up your GitLab, GitHub, Bitbucket, and Azure DevOps repositories or Jira instances is vital for data protection and continuity, even though it may sound like a buzzword.

Responsibility models

Another crucial factor is the Shared Responsibility Model (SRM) under which public repository providers operate. SaaS firms generally cover the cloud infrastructure, but data protection at the account level has some limitations. In other words, you create and manage any file in the repository on your own.

It’s just as you can read in the subscription agreement (e.g., GitLab):

“Customer is responsible for implementing and maintaining privacy protections and security measures for components that Customer provides and controls.”

And that’s a crucial reason for backup vendor diversification.

What is an S3?

As you may know, S3 is an object storage system. It keeps and handles large amounts of unstructured data. Instead of using a typical hierarchical file structure (file system), it stores all information as objects in a bucket.

Each object consists of the actual data and metadata (e.g., pull requests) describing a given resource:

- documents or any file (including binary)

- images

- videos

- backups

- stats to analyze data, and many more.
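
To make the object model more concrete, here is a minimal in-memory sketch in Python. It is not how S3 is implemented. The `Bucket` and `StoredObject` classes and the sample keys are hypothetical, and they only illustrate that a bucket maps flat keys to data plus metadata, while "folders" are just shared key prefixes.

```python
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    """One object: raw bytes plus descriptive metadata."""
    data: bytes
    metadata: dict = field(default_factory=dict)


class Bucket:
    """Toy stand-in for a bucket: a flat mapping from key to object."""

    def __init__(self, name: str):
        self.name = name
        self._objects = {}

    def put(self, key: str, data: bytes, **metadata: str) -> None:
        self._objects[key] = StoredObject(data, dict(metadata))

    def get(self, key: str) -> StoredObject:
        return self._objects[key]

    def list_keys(self, prefix: str = "") -> list[str]:
        # There are no real directories; a "folder" is a shared key prefix.
        return sorted(k for k in self._objects if k.startswith(prefix))


bucket = Bucket("example-bucket")
bucket.put("backups/repo-a/2024-09-17.bundle", b"...", source="gitlab")
bucket.put("backups/repo-b/2024-09-17.bundle", b"...", source="github")
bucket.put("images/logo.png", b"...", content_type="image/png")

print(bucket.list_keys("backups/"))
```

Listing by prefix is the closest equivalent to browsing a directory, which is why backup tools usually encode the repository name and date into the key.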

Amazon designed S3 (Simple Storage Service) as a particular object storage implementation and launched it on March 14, 2006. Among the key capabilities of S3, you’ll find:

- Buckets

A bucket is a container that can hold an unlimited number of objects, each up to 5 TB in size. You can perform various operations within the same bucket, from upload and integration to versioning and more. Each bucket name must be globally unique and DNS-compliant.

- Scalability

With virtually limitless storage capacity, S3 helps companies scale their storage to current and future needs.

- Data access

Customers access S3 objects in the cloud via the AWS Management Console or APIs (AWS SDK or S3 REST API). Together they support interactions with stored data through a web interface or the command line.

- Availability (durability)

Amazon S3 is designed for 99.999999999% (eleven nines) durability. That is possible because S3 automatically stores each object redundantly across multiple Availability Zones within a region.

For the Standard storage class, S3 is designed for 99.99% availability.

DID YOU KNOW?

✅ A 99.999999999% durability rate means that for every 100 billion objects stored, only 1 might be lost in a given year,

✅ 99.99% availability suggests that you may experience up to about 52 minutes of downtime per year when accessing the data.
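
Both figures follow from simple arithmetic. The sketch below is only an illustration: the function names are made up for this example, a 365-day year is assumed, and the durability input is the eleven-nines figure (99.999999999%) that AWS publishes for S3 Standard. Percentages are passed as strings so that `Fraction` can keep them exact.

```python
from fractions import Fraction

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, ignoring leap years


def max_downtime_minutes(availability_percent: str) -> float:
    """Yearly downtime allowed by an availability percentage."""
    unavailable = 1 - Fraction(availability_percent) / 100
    return float(unavailable * MINUTES_PER_YEAR)


def objects_per_expected_loss(durability_percent: str) -> int:
    """Number of stored objects for which one loss per year is expected."""
    annual_loss_rate = 1 - Fraction(durability_percent) / 100
    return int(1 / annual_loss_rate)


print(max_downtime_minutes("99.99"))              # about 52.56 minutes
print(objects_per_expected_loss("99.999999999"))  # 100 billion objects
```

Each additional nine of durability multiplies the second number by ten, so the exact count of nines matters when comparing providers.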

5 most recognized S3 providers (cloud native applications) worldwide

The most recognized and reliable S3-compatible storage services you can consider include:

Amazon Web Services (AWS S3) – Amazon S3

Amazon S3 is the original S3 service, offering highly scalable and durable features. The solution integrates smoothly with the AWS ecosystem. For enterprises and startups, it’s the go-to choice, with comprehensive security features.

Google Cloud Storage (GCS)

GCS is not S3 itself, but it offers an S3-compatible (interoperable) API and strong integration across the Google Cloud platform. It provides flexible pricing tiers and is ideal for data analytics, machine learning, and high-performance apps requiring easy access to Google Cloud tools.

Microsoft Azure Blob Storage (MABS)

Azure’s object storage service is part of a larger, integrated cloud ecosystem. Azure Blob offers compatibility with the Microsoft stack (well, that’s where GitHub backups come from). It’s ideal for any enterprise that already uses Azure for other services and applications, or wants to deploy and configure it.

Backblaze B2

One of the most cost-effective S3 options out there. B2 is known for its affordability, providing a solution with no egress fees. Backblaze is popular among firms needing inexpensive long-term storage without sacrificing durability.

Wasabi

This is another example of an S3-compliant option at a lower cost than major providers and with no egress or API request fees. Companies seeking predictable pricing for high-volume storage should consider Wasabi to upload and secure their backups.

All of the above are public cloud services that offer various options for integrating with local (on-premises) infrastructure, such as a hybrid cloud.

| Feature | AWS S3 | Google Cloud | Azure Blob | Wasabi | Backblaze B2 |
|---|---|---|---|---|---|
| Storage classes (tiers) | Standard, Intelligent-Tiering, One Zone-IA, Glacier, Glacier Deep Archive | Standard, Nearline, Coldline, Archive | Hot, Cold, Archive | Standard, Archive | Standard, Archive |
| Data durability | 99.999999999% | 99.999999999% | 99.999999999% | 99.999999999% | 99.999999999% |
| Data availability | 99.99% | 99.99% | 99.99% | 99.99% | 99.99% |
| Global distribution | ☑️ | ☑️ | ☑️ | ❌ | ❌ |
| Access control | IAM, Bucket Policies, ACL | IAM, Bucket Policies | IAM, Bucket Policies, ACL | IAM, Bucket Policies | IAM, Bucket Policies |
| Versioning | ☑️ | ☑️ | ☑️ | ❌ | ☑️ |
| Lifecycle management | ☑️ | ☑️ | ☑️ | ❌ | ☑️ |
| Event notifications | ☑️ | ☑️ | ☑️ | ❌ | ❌ |
| Encryption | Server-side: SSE-S3, SSE-KMS, SSE-C; client-side | Server-side: Google-managed, customer-managed | Server-side: Azure-managed, customer-managed | Server-side (default) | Server-side (default) |
| API/SDK | AWS SDK, REST API | Google Cloud SDK, REST API | Azure SDK, REST API | S3-compatible API, SDK | S3-compatible API, SDK |
| Pricing | Pay-as-you-go tiered pricing | Pay-as-you-go tiered pricing | Pay-as-you-go tiered pricing | Flat-rate pricing | Pay-as-you-go tiered pricing |
| Free tier | 5 GB of storage, 20k GET requests, 2k PUT requests/month | 5 GB of storage, 1 GB of egress | 5 GB of storage, 20k reads, 20k writes/month | 1 GB of storage, 1 GB of egress/month | 10 GB of storage, 1 GB of egress/month |

Key S3 backup security features

We’ve already seen that S3 storage providers are eager to ensure the security of their service. However, when you’re choosing cloud storage for your backups, it’s better to take the following S3 security features into account:

Encryption at rest

In terms of server-side encryption, S3 offers the SSE-S3 option. It encrypts data in the bucket with 256-bit AES, managed by AWS. So, each object is encrypted using a unique key, while the key itself is also protected through a master key.

Another server-side approach is SSE-KMS. AWS Key Management Service (KMS) allows users to manage encryption keys. It gives more control over key rotation and IAM policy permissions.
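
As a rough illustration of the two server-side options, the sketch below builds the arguments that boto3's `put_object` call accepts for each of them. The helper names, the bucket, the object key, and the KMS key identifier are placeholders invented for this example. The client is passed in as a parameter (it is expected to be `boto3.client("s3")`), so the snippet itself needs no third-party imports.

```python
def sse_s3_upload_args(bucket: str, key: str, body: bytes) -> dict:
    """Arguments for an upload encrypted with S3-managed keys (SSE-S3)."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ServerSideEncryption": "AES256",
    }


def sse_kms_upload_args(bucket: str, key: str, body: bytes, kms_key_id: str) -> dict:
    """Arguments for an upload encrypted with a KMS key (SSE-KMS)."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ServerSideEncryption": "aws:kms",
        "SSEKMSKeyId": kms_key_id,
    }


def upload_backup(s3_client, upload_args: dict) -> None:
    # s3_client is assumed to be boto3.client("s3").
    s3_client.put_object(**upload_args)


args = sse_kms_upload_args(
    "example-bucket", "backups/repo.bundle", b"backup bytes", "hypothetical-key-id"
)
print(args["ServerSideEncryption"])
```

The only difference between the two modes at upload time is the `ServerSideEncryption` value and, for SSE-KMS, the key reference. Who may use that key is then governed by KMS and IAM policies.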

Client-side encryption

With such an encryption mechanism, customers can secure each backup file before they archive it in an S3 bucket. The encryption key they create doesn’t leave the user’s environment, so AWS can’t see it.

Encryption in transit

S3 supports HTTPS (SSL/TLS) to protect transmitted data. The main goal is to prevent interception and man-in-the-middle (MITM) attacks when customers connect to an S3 bucket.
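
Supporting HTTPS does not by itself reject plain HTTP requests. A common way to enforce encryption in transit is a bucket policy that denies any request where the `aws:SecureTransport` condition key is false. The sketch below only builds such a policy document. The function name and bucket name are assumptions for illustration, and `2012-10-17` is the policy language version string that AWS defines.

```python
import json


def https_only_policy(bucket: str) -> dict:
    """Bucket policy that denies every S3 action sent over plain HTTP."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


print(json.dumps(https_only_policy("example-bucket"), indent=2))
```

The statement covers both the bucket ARN and the `/*` object ARN so that bucket-level calls such as listing are denied over HTTP as well.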

Access control practices

Access to the encrypted data stored as S3 objects is governed by two (or more) types of policies: bucket policies and Access Control Lists (ACLs).

As the name suggests, bucket policies are defined at the bucket level. They use JSON-based rules to control access.

With these policies, you can define who (IAM role, user, account) can interact with specific objects and in what way (read/write/list/delete).

A typical bucket policy looks like this:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {"AWS": "arn:aws:iam::123456789012:user/YourUser"},
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::example-bucket/*"
        }
      ]
    }

ACLs present another approach to controlling object-level permissions. They allow you to define read/write privileges for users or groups. You can also combine such lists with policies to create fine-grained, per-file access rules.

Identity and Access Management (IAM) integration

The IAM integration is used to keep control over who can access S3 services and resources.

This way, you can let a user assume an IAM role that grants temporary and restricted access to particular Amazon S3 actions. Apps or services can also assume these roles to interact with S3 on the user’s behalf.

When you create IAM policies, you define JSON-based policy documents. These files clarify what S3 actions – e.g., GetObject, PutObject, or ListBucket – a user or role will be allowed to perform on a specific object or bucket.

A simple policy:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "s3:PutObject",
          "Resource": "arn:aws:s3:::example-bucket/*"
        }
      ]
    }

Audit logging (S3 Access Logs/AWS CloudTrail)

Audit logging is an essential tool for tracking access and for supporting security and compliance measures.

S3 Access Logs capture all requests addressed to a bucket, including the request author, time, and location. That allows for auditing access and possible security incidents.

On the other hand, AWS CloudTrail records API calls sent to AWS services, including S3. The logs uncover who created or deleted an object, improving the compliance and security analysis.
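
To show what such an analysis can look like, here is a small sketch that scans CloudTrail-style records for object deletions. The two sample records are hand-written for this example, not real log output, and they use only a few of the fields CloudTrail emits (`eventName`, `eventTime`, `userIdentity`, `requestParameters`). Note also that object-level S3 calls are CloudTrail data events, which have to be enabled on the trail before they are recorded.

```python
import json

# Two hand-written records shaped like CloudTrail S3 data events.
SAMPLE_LOG = json.dumps({
    "Records": [
        {
            "eventTime": "2024-09-17T10:15:00Z",
            "eventName": "PutObject",
            "userIdentity": {"arn": "arn:aws:iam::123456789012:user/backup-bot"},
            "requestParameters": {
                "bucketName": "example-bucket",
                "key": "backups/repo.bundle",
            },
        },
        {
            "eventTime": "2024-09-17T11:02:00Z",
            "eventName": "DeleteObject",
            "userIdentity": {"arn": "arn:aws:iam::123456789012:user/intern"},
            "requestParameters": {
                "bucketName": "example-bucket",
                "key": "backups/repo.bundle",
            },
        },
    ]
})


def who_deleted(log_text: str, bucket: str) -> list[tuple[str, str, str]]:
    """Return (time, principal ARN, key) for each DeleteObject call on bucket."""
    hits = []
    for record in json.loads(log_text)["Records"]:
        params = record.get("requestParameters") or {}
        if record.get("eventName") == "DeleteObject" and params.get("bucketName") == bucket:
            hits.append((record["eventTime"], record["userIdentity"]["arn"], params["key"]))
    return hits


for when, principal, key in who_deleted(SAMPLE_LOG, "example-bucket"):
    print(when, principal, key)
```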

Cross-Origin Resource Sharing (CORS)

S3 supports CORS policies to improve control over access from various domains.

    Access-Control-Allow-Origin: https://gitprotect.io
    Access-Control-Allow-Methods: GET, POST, PUT, DELETE
    Access-Control-Allow-Headers: Content-Type, Authorization
    Access-Control-Allow-Credentials: true

CORS headers allow you to manage whether an external web application can access given objects in an S3 bucket. The solution increases the security of web-based tools interacting with your repository.
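
In S3, response headers like these come from a CORS configuration attached to the bucket. A minimal sketch of such a configuration, written as the dictionary that boto3's `put_bucket_cors` accepts, could look as follows. The helper names and the `MaxAgeSeconds` value are assumptions for illustration, and the client is again expected to be `boto3.client("s3")`.

```python
def cors_configuration(origin: str) -> dict:
    """CORS rules allowing one origin to read and write objects."""
    return {
        "CORSRules": [
            {
                "AllowedOrigins": [origin],
                "AllowedMethods": ["GET", "POST", "PUT", "DELETE"],
                "AllowedHeaders": ["Content-Type", "Authorization"],
                "MaxAgeSeconds": 3000,  # how long browsers may cache the preflight
            }
        ]
    }


def apply_cors(s3_client, bucket: str, origin: str) -> None:
    # s3_client is assumed to be boto3.client("s3").
    s3_client.put_bucket_cors(
        Bucket=bucket,
        CORSConfiguration=cors_configuration(origin),
    )


rule = cors_configuration("https://gitprotect.io")["CORSRules"][0]
print(rule["AllowedOrigins"], rule["AllowedMethods"])
```

Listing a single trusted origin rather than `*` keeps other websites from reading the bucket's objects through a visitor's browser.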

Multi-Factor Authentication (MFA) Delete

MFA Delete offers an additional security barrier. It requires MFA for strictly defined operations: permanently deleting an object version or changing the versioning state of the bucket.

By doing so, you can secure your backup files from accidental deletions and malicious data removal.
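
MFA Delete is switched on together with versioning. The sketch below builds the arguments for boto3's `put_bucket_versioning` call, where the `MFA` value is the device serial number, a space, and the current code. The helper names, the device serial, and the code shown are placeholders, not real values.

```python
def mfa_delete_request(bucket: str, mfa_serial: str, mfa_code: str) -> dict:
    """Arguments that enable versioning together with MFA Delete."""
    return {
        "Bucket": bucket,
        # Serial number of the MFA device, a space, then the current code.
        "MFA": f"{mfa_serial} {mfa_code}",
        "VersioningConfiguration": {
            "Status": "Enabled",
            "MFADelete": "Enabled",
        },
    }


def enable_mfa_delete(s3_client, bucket: str, mfa_serial: str, mfa_code: str) -> None:
    # s3_client is assumed to be boto3.client("s3").
    s3_client.put_bucket_versioning(**mfa_delete_request(bucket, mfa_serial, mfa_code))


request = mfa_delete_request(
    "example-bucket", "arn:aws:iam::123456789012:mfa/hypothetical-device", "123456"
)
print(request["VersioningConfiguration"])
```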

Restricted public access (data protection)

S3 Block Public Access is another security capability. It can block public access at the bucket and account levels, which prevents users from unintentionally exposing sensitive data.

The feature can ignore ACLs that make objects public and reject policies that grant public access, unless you explicitly turn these settings off.
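
Block Public Access consists of four independent switches. The sketch below lists them as the configuration dictionary that boto3's `put_public_access_block` accepts and adds a small check for settings that are still open. The helper names and the bucket name are assumptions made for this example.

```python
# The four Block Public Access settings, all switched on.
FULL_BLOCK = {
    "BlockPublicAcls": True,        # reject new public ACLs
    "IgnorePublicAcls": True,       # ignore public ACLs that already exist
    "BlockPublicPolicy": True,      # reject bucket policies that grant public access
    "RestrictPublicBuckets": True,  # restrict access to buckets with public policies
}


def open_settings(config: dict) -> list[str]:
    """Names of Block Public Access settings that are missing or switched off."""
    return [name for name in FULL_BLOCK if not config.get(name, False)]


def block_public_access(s3_client, bucket: str) -> None:
    # s3_client is assumed to be boto3.client("s3").
    s3_client.put_public_access_block(
        Bucket=bucket,
        PublicAccessBlockConfiguration=FULL_BLOCK,
    )


print(open_settings({"BlockPublicAcls": True, "IgnorePublicAcls": True}))
```

For a backup bucket there is rarely a reason to leave any of the four settings off, so an empty result from `open_settings` is the state to aim for.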

S3 storage for DevOps backups. For whom?

Looking at the S3 features, it’s clear that companies that handle large amounts of critical data, especially those in highly regulated or data-heavy industries, will benefit the most from using S3 for backups.

Among them are:

Financial institutions that process and analyze data

Banks and other financial organizations create and handle massive volumes of sensitive customer data. That processing must meet strict compliance requirements like GDPR, HIPAA, SOC 2, PCI-DSS, NIS 2, or DORA.

For such organizations, high durability and strong security features (including encryption, IAM controls, and audit logs) are the best match for their platform.

The ability to store vast amounts of data with flexible cost models, such as S3 Glacier for cold storage, makes it ideal for long-term data retention and regulatory audits.
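
Moving older backups to cold storage is usually configured as a lifecycle rule. The sketch below builds such a rule in the shape that boto3's `put_bucket_lifecycle_configuration` accepts. The rule ID, prefix, and day counts are arbitrary example values, not retention recommendations, and the helper names are made up for illustration.

```python
def archive_rule(prefix: str, glacier_after_days: int, expire_after_days: int) -> dict:
    """Lifecycle configuration: move objects to Glacier, then expire them."""
    if expire_after_days <= glacier_after_days:
        raise ValueError("objects must expire after they are archived")
    return {
        "Rules": [
            {
                "ID": "archive-old-backups",
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"}
                ],
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


def apply_archive_rule(s3_client, bucket: str, config: dict) -> None:
    # s3_client is assumed to be boto3.client("s3").
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=config,
    )


# Example: archive after 90 days, delete after roughly 7 years.
config = archive_rule("backups/", glacier_after_days=90, expire_after_days=2555)
print(config["Rules"][0]["Transitions"])
```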

Healthcare and pharmaceutical

Healthcare faces similar challenges. Every entity manages patient records, clinical trials, and research data in its environment. That environment must support secure data backups and comply with standards like HIPAA.

S3 bucket encryption options (both at rest and in transit) – combined with versioning and audit logging – help maintain data integrity and compliance.

In healthcare, fast recovery (low RTO/RPO) of a file or database is critical for patient care continuity. That makes S3’s availability a vital option.

Tech and DevOps firms

Every fast-growing tech enterprise values scalable storage to keep pace with its expanding data needs. The integrations available for S3 buckets make it possible to configure automated backups that scale as the business grows.

This way, they ensure repositories, artifacts, and CI/CD pipelines (counted in dozens of terabytes) are safely stored without manual intervention.

Startups benefit from flexible pricing based on usage. It reduces initial costs while still offering reliability, so startups tend to choose well-known storage classes with predictable pricing.

DID YOU KNOW?

✅ Using the GitProtect.io backup and restore solution allows you to achieve the 10-minute Recovery Time Objective (RTO) working with S3.

Media and entertainment

In the media and entertainment industry, files can be massive:

- videos

- graphics

- sounds

- documentation (e.g., in GitHub repository)

- source code

- application data

- database

- service configuration

- backups (e.g., GitHub backups).

Entertainment entities, like game developers, require high-performance computing to analyze data while operating with specific parameters, settings, and code. Such firms rely on both cloud and on-premises infrastructure.

Given the high market value of each project in the media and entertainment industry, a reliable S3 provider, with its unique features tailored for large file management and backup, is a valuable asset in any venture.

That requires the ability to deploy efficient backup systems, including fast interaction with the archive and version control. For projects whose data quickly grows to terabytes or even exabytes (!), S3’s scalability is essential.

S3 storage classes support high-volume, high-throughput demands while enabling fast upload and retrieval of backups. That’s especially important in post-production management, where every time-sensitive project requires quick interaction with an archived data file.

Enterprises with remote and hybrid workforce

Large organizations with distributed teams pay close attention to any centralized, scalable backup platform as they manage vast amounts of sensitive (and other) data.

Unsurprisingly, an S3 bucket offers a single, globally accessible storage location with granular access controls. It ensures teams can keep and retrieve data securely from any location. The backup process (e.g., of a GitHub repository) is simplified, reducing the complexity of managing backups across multiple sites.

Cloud-native firms and SaaS providers

Companies operating on online platforms are willing to pay for a cloud service that supports seamless growth and integration with CI/CD pipelines. With that, efficient management of frequent backups follows.

A tiered S3 plan helps optimize expenses, maintain high availability, and support the enterprise’s overall resilience.

In addition, cloud services strongly rely on security and compliance. To protect and archive data, they require exceptionally strong encryption and audit capabilities. For that, they expect S3’s high durability and cross-region replication from each S3 bucket to protect information at the needed level.

This way, quick recovery operations can adhere to SOC 2, GDPR, and other strict industry regulations at a reasonable cost.

All aforementioned cases involve at least a 3-2-1 or 3-2-1-1-0 rule for backups. It’s much easier to manage them with a multi-storage system like GitProtect.io, which provides GitProtect Cloud Storage for free, regardless of the license.
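
As a quick illustration of the 3-2-1 rule (at least three copies of the data, on at least two different kinds of storage, with at least one copy offsite), the check below validates a backup plan. The `BackupCopy` class, the location names, and the storage labels are hypothetical, and the production copy is assumed to count as one of the three.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    location: str  # where the copy lives (hypothetical label)
    media: str     # kind of storage holding it
    offsite: bool  # True if stored away from the primary site


def satisfies_3_2_1(copies: list[BackupCopy]) -> bool:
    """At least 3 copies, on at least 2 kinds of storage, at least 1 offsite."""
    return (
        len(copies) >= 3
        and len({copy.media for copy in copies}) >= 2
        and any(copy.offsite for copy in copies)
    )


plan = [
    BackupCopy("production-git-server", "local-disk", offsite=False),
    BackupCopy("office-nas", "nas", offsite=False),
    BackupCopy("s3-bucket", "object-storage", offsite=True),
]
print(satisfies_3_2_1(plan))
```

Dropping the S3 copy from this plan fails the check twice over, since the plan then has only two copies and none of them is offsite.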

Summary

S3-compliant repositories may be the right choice for businesses managing large volumes of critical and sensitive data. Firms operating in highly regulated, data-intensive industries view S3 backup as necessary for their environments.

A storage solution like an S3 bucket offers strong data protection and encryption, as well as cross-region replication settings. This improves businesses’ compliance and supports their continuity, thus increasing their competitiveness.

Moreover, S3 is an answer to growing backup strategy needs. The service becomes a key tool for reducing risk and optimizing costs. S3-compatible storage is a strategic choice that allows any modern enterprise to maintain high availability and pay less.
