How Attackers Use AI To Spread Malware On GitHub

GitHub Copilot has become the subject of critical security concerns, mainly because of jailbreak vulnerabilities that allow attackers to modify the tool’s behavior. Two attack vectors – Affirmation Jailbreak and Proxy Hijack – lead to malicious code generation and unauthorized access to premium AI models. But that’s not all.

Unsurprisingly, a vast number of GitHub repos contain external AI software, posing compliance risks and opening the door to data and financial exploitation. Things became even more interesting when Copilot and Microsoft Bing’s caching mechanisms inadvertently exposed thousands of private GitHub repos.

This can directly harm crucial business KPIs, including business reputation and reliability. So, let’s dive a bit into each problem and uncover new GitHub… possibilities.

Jailbreaking GitHub Copilot

As you probably guessed, jailbreak attacks circumvent the AI tool’s ethical safeguards and security features. Bypassing them appears to be fairly easy with the Affirmation Jailbreak and Proxy Hijack methods.

Affirmation jailbreak? “Sure,” let’s exploit the AI system(s)

Like many generative AI tools, GitHub Copilot leverages LLMs, typically transformer-based architectures. Such models are trained on massive, mostly open-source datasets and are governed by security policies.

The training data for Copilot naturally includes a large amount of publicly available code from GitHub, documentation, and various text sources. In short, diverse data enables valuable code suggestions but can also carry over risky vulnerabilities.

In this case, the model’s contextual understanding is susceptible to manipulation that influences its output.

The affirmation jailbreak exploits contextual reinforcement in a generative AI assistant, which may be tricked into interpreting malicious requests as legitimate. The resulting harmful content may include:

- SQL injections

- malware scripts

- various network attack vectors.

In other words, the described vulnerability involves exploiting Copilot’s tendency to respond affirmatively to user input. By prefacing a request with phrases like “sure,” an attacker can override the tool’s built-in ethical standards and force it to provide harmful code suggestions.
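On the defensive side, a team that logs or proxies prompts could flag inputs in which an affirmative answer has been planted ahead of the assistant's reply. The sketch below is a minimal heuristic under stated assumptions, not GitHub's actual safeguard: the phrase list and the function name `has_affirmation_prefix` are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical list of affirmative openers an attacker might plant in a prompt.
AFFIRMATIONS = ("sure", "certainly", "of course", "absolutely")

# Matches any line that starts with one of the openers followed by , ! . or :
_PATTERN = re.compile(
    r"^\s*(?:" + "|".join(re.escape(a) for a in AFFIRMATIONS) + r")\s*[,!.:]",
    re.IGNORECASE | re.MULTILINE,
)


def has_affirmation_prefix(prompt: str) -> bool:
    """Return True if any line of the prompt begins with a planted affirmation."""
    return _PATTERN.search(prompt) is not None
```

A check like this will produce false positives on ordinary conversation, so it is better suited to raising a review flag than to blocking requests outright.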

Behavior like the above results from insufficient adversarial training mechanisms. These mechanisms are designed to filter harmful content while preserving the flexibility of generative AI. Once attackers are able to bypass such security measures, the company can face a significant impact on its IT and organizational goals, as presented in the table.

| KPI (goal) | Impact |
| --- | --- |
| Code Security (Vulnerability Rate) | Increased, starting with unauthorized harmful code suggestions. |
| Compliance Risk | Increased due to additional security breaches introduced via Copilot-generated code. |
| Incident Response Time | Increased due to additional security breaches introduced via Copilot-generated code. |
| Developer Productivity | Reduced following the need for manual security audits of AI-generated code. |
| Reputation Management | Negative impact if Copilot-generated code contributes to a security incident. |

Proxy Hijack. Unrestricted access and AI resource exploitation

The Proxy Hijack vulnerability involves redirecting traffic through attacker-controlled proxy servers. It allows attackers to capture authentication tokens and gain unauthorized access to OpenAI models. The result is complete control over Copilot’s behavior and entry to AI-driven development platforms (environments).

To put it differently, Proxy Hijack exploits how GitHub Copilot interacts with OpenAI’s API. So, it can be treated as a man-in-the-middle attack targeting the authentication token exchange. These tokens validate an active Copilot license to access services (interaction with AI).

Attackers manipulate proxy server configurations to intercept authentication traffic and extract valid tokens. Once acquired, the token provides unrestricted access to premium OpenAI models and works without billing oversight.
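Because the attack depends on a manipulated proxy configuration, one simple countermeasure is to audit proxy settings on developer machines. The sketch below only inspects the common proxy environment variables and compares them with an allow-list; the host `proxy.corp.example` and the function name are hypothetical, and editor-level proxy settings are out of scope here.

```python
import os
from urllib.parse import urlparse

# Hypothetical allow-list of proxy hosts approved by the organization.
ALLOWED_PROXY_HOSTS = {"proxy.corp.example"}

PROXY_VARS = ("HTTP_PROXY", "HTTPS_PROXY", "http_proxy", "https_proxy")


def find_unapproved_proxies(env=None, allowed=ALLOWED_PROXY_HOSTS):
    """Return {variable: host} for proxy settings that point outside the allow-list."""
    env = os.environ if env is None else env
    suspicious = {}
    for name in PROXY_VARS:
        value = env.get(name)
        if not value:
            continue
        # urlparse only fills hostname when a scheme (or a leading //) is present.
        parsed = urlparse(value if "//" in value else "//" + value)
        host = (parsed.hostname or "").lower()
        if host not in allowed:
            suspicious[name] = host
    return suspicious
```

Calling `find_unapproved_proxies()` with no arguments checks the current process environment; passing a dictionary makes the function easy to run against collected configuration snapshots.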

Using such an exploit, unauthorized users can execute computationally expensive AI tasks without enterprise-level restrictions. This leads directly to financial exploitation and high security risks, potentially threatening IT and the company’s KPIs.

| KPI (goal) | Impact |
| --- | --- |
| Cloud and AI costs | Increased due to unauthorized consumption of AI resources. |
| Infrastructure utilization | System overload caused by unmonitored API calls and excessive AI workloads. |
| Security compliance | Access controls bypassed, violating internal enterprise policies. |
| Audit and monitoring efficiency | Disrupted and reduced, with no visibility into unauthorized API usage. |
| IT budget planning | Negative impact: unexpected costs from uncontrolled (malicious) API usage. |

GitHub Copilot and AI system(s). A range of diverse threats and three examples

Now, let’s take a quick look at how attackers can leverage AI models to automate and boost their activities through GitHub Copilot. Automating and scaling malware distribution on GitHub allows them to create, obfuscate, and deploy harmful code with minimal human intervention.

Example 1. Malware obfuscation with AI

This attack approach involves utilizing AI to enhance malware obfuscation, specifically focusing on polymorphic malware.

Polymorphic malware exploits the fact that traditional signature-based antivirus relies on matching known malware patterns. AI-generated polymorphic malware overcomes that by dynamically altering its code structure with each execution.

Copilot can assist in generating polymorphic code, suggesting:

- variations in variable names

- code flow

- encryption routines.

This makes it difficult for antivirus software to detect such code in time, or at all.
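To see why exact-match signatures are so brittle, consider a harmless illustration. The sketch below uses a SHA-256 digest as a stand-in for a naive signature (an assumption made for simplicity, since real antivirus engines use richer patterns) and shows that renaming two variables in a benign function is enough to change it.

```python
import hashlib


def signature(source: str) -> str:
    """Return a SHA-256 digest, standing in for a naive exact-match signature."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


# Two harmless functions with identical behavior but different variable names.
variant_a = "def add(a, b):\n    return a + b\n"
variant_b = "def add(x, y):\n    return x + y\n"

same_signature = signature(variant_a) == signature(variant_b)
print(same_signature)  # False: the digests differ
```

This is why defenders increasingly combine signatures with behavioral and heuristic detection.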

Diving deeper, Copilot can also create:

- encoded payloads, like Base64 or XOR

- obfuscated PowerShell scripts (utilizing string concatenation and variable substitution)

- encrypted binaries (using strong encryption algorithms).

Such techniques mask the intent of the malicious code, which poses an additional challenge because it appears benign to security tools. Notable examples of this type of threat include BlackMamba and Forest Blizzard (also known as Fancy Bear).
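Encoded payloads leave traces that a reviewer can look for. As a rough sketch, the function below flags long strings in a script that decode cleanly as Base64; the 40-character threshold and the name `find_base64_blobs` are assumptions, and legitimate data (embedded images, long hex hashes) will also match, so hits need manual review.

```python
import base64
import binascii
import re

# Runs of 40+ Base64 characters, optionally padded; the threshold is an assumption.
_B64_RUN = re.compile(r"[A-Za-z0-9+/]{40,}={0,2}")


def find_base64_blobs(text: str) -> list[str]:
    """Return substrings that look like, and decode as, long Base64 payloads."""
    hits = []
    for match in _B64_RUN.finditer(text):
        candidate = match.group(0)
        if len(candidate) % 4 != 0:
            continue  # padded Base64 always has a length divisible by 4
        try:
            base64.b64decode(candidate, validate=True)
        except (binascii.Error, ValueError):
            continue
        hits.append(candidate)
    return hits
```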

Example 2. Automated exploit generation

CVEs (Common Vulnerabilities and Exposures) are another critical area where AI helps lower the barrier to entry for malicious actors.

The CVE system can be described as a standard list of publicly known information security vulnerabilities. Each CVE has a unique identifier, such as CVE-2025-XXXX. These identifiers provide a common language for discussing and addressing vulnerabilities.
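Since the identifier format is fixed (the prefix `CVE`, a four-digit year, and a sequence number of four or more digits), it is easy to pull CVE references out of advisories or commit messages for triage. The helper below is a small sketch; the function name `extract_cve_ids` is made up for this example.

```python
import re

# CVE IDs have the form "CVE-<4-digit year>-<4 or more digits>".
CVE_ID = re.compile(r"\bCVE-(\d{4})-(\d{4,})\b")


def extract_cve_ids(text: str) -> list[str]:
    """Return the distinct CVE identifiers mentioned in text, in order of appearance."""
    seen = []
    for match in CVE_ID.finditer(text):
        if match.group(0) not in seen:
            seen.append(match.group(0))
    return seen
```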

CVEs are a bit paradoxical. They allow experts to prioritize patching and mitigation efforts while providing a roadmap for attackers to exploit known weaknesses.

Of course, developing exploits can be complex and time-consuming. The process requires in-depth knowledge of system architecture, programming, and security principles. GitHub Copilot can significantly reduce the time needed for exploit generation, especially by suggesting code snippets for:

- ROP (Return-Oriented Programming) chains (sequences of instructions that hijack program control)

- buffer overflow exploits (overwriting memory to execute arbitrary code)

- kernel privilege escalation scripts (gaining administrative access).

Example 3. Supply chain poisoning through dependency confusion

Supply chain poisoning via dependency confusion is an increasingly prevalent attack vector.

It’s a particularly insidious method supported and amplified by AI.

When thinking about dependency confusion, it’s worth remembering that software projects often use external libraries and packages (dependencies) hosted in public repos (npm, PyPI, NuGet, and others). Companies can also have internal repositories for private packages.

Dependency confusion occurs when an attacker uploads a malicious package to a public repository with the same name as a private one. If the package manager is configured to prioritize public repos, it may (even inadvertently) download the harmful package instead of the legitimate one.

The same happens when the package manager doesn’t differentiate correctly between public and private repos. As a result, the malicious code is incorporated into the software project.
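A practical first check is to see whether any internal package name is already taken on a public index. The sketch below queries PyPI's JSON endpoint (`https://pypi.org/pypi/<name>/json`, which answers 404 for unknown projects); the function names and the injectable `lookup` parameter are illustrative assumptions, and other ecosystems such as npm or NuGet would need their own lookups.

```python
import urllib.error
import urllib.request


def exists_on_pypi(name: str) -> bool:
    """Return True if PyPI's JSON API knows a project with this name."""
    url = f"https://pypi.org/pypi/{name}/json"
    try:
        with urllib.request.urlopen(url, timeout=10) as response:
            return response.status == 200
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return False
        raise


def find_name_collisions(internal_packages, lookup=exists_on_pypi):
    """Return internal package names that also exist in the public index."""
    return sorted(name for name in internal_packages if lookup(name))
```

Any name this reports deserves attention: either the public package is an attacker's upload, or the name should be reserved or scoped so the package manager cannot mix the two sources up.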

Other threats to GitHub Copilot using AI model(s)

Among the most common (or gaining “recognition”) attacks related to GitHub Copilot, experts notice:

- Bot-assisted phishing through AI-generated repositories

- AI-augmented dependency poisoning

- AI-powered social engineering and automated PR (pull requests) attacks.

Ad. 1. With a little help from an AI bot

Bot-assisted phishing through AI-generated (fraudulent) repositories utilizes the power of fake. Malicious actors use Copilot to generate realistic but fake repos with equally fake documents, dependencies, and backdoored scripts.

Such repos usually look official, so developers unknowingly clone and execute their content. This builds a simple relation: fraudulent repos → developer targeting.

Ad. 2. Poison was the cure

When AI automates package name generation to exploit common typos and naming conventions, it increases the chance of successful dependency confusion attacks. Then, the AI dynamically adapts harmful code to match the coding styles of legitimate projects, reducing suspicion and making it harder to detect.

Further, the AI model can map dependency chains to identify the most effective injection points. This minimizes detection risk and maximizes the impact of the attack.
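Defenders can apply the same name-similarity idea in reverse and flag new dependencies whose names sit suspiciously close to packages the project already trusts. The sketch below relies on the standard library's `difflib`; the 0.85 cutoff and the function name `find_lookalikes` are assumptions that would need tuning on real dependency lists.

```python
import difflib


def find_lookalikes(candidate, trusted_names, cutoff=0.85):
    """Return trusted names that candidate closely resembles without matching exactly."""
    candidate = candidate.lower()
    trusted = [name.lower() for name in trusted_names]
    if candidate in trusted:
        return []  # exact match: this is the trusted package itself
    return difflib.get_close_matches(candidate, trusted, n=3, cutoff=cutoff)
```

A non-empty result does not prove malice, but it is a cheap signal worth surfacing in code review or CI before a new dependency is installed.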

Ad. 3. The great (AI) pretender

AI can generate malicious pull requests containing seemingly beneficial changes that actually introduce vulnerabilities. This often happens under the guise of bug fixes or performance enhancements with convincing code changes.

Such PRs often include AI-crafted and convincing messages, usually impersonating maintainers, admins, and other trusted IT team members. This allows AI models to enhance social engineering attacks.

An additional element is commit history manipulation. AI manipulates commit history to obfuscate malicious contributors, making tracing the attack’s origin difficult. The activity includes:

- reordering GitHub commits

- altering timestamps

- modifying commit messages.
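Altered timestamps sometimes leave an inconsistency behind: a commit that claims to be older than its own parent. The sketch below flags such commits from data that could be exported with `git log --format="%H %P %ct"`; the `Commit` structure and function name are assumptions for illustration, and clock skew or legitimate rebases can produce the same pattern.

```python
from typing import NamedTuple


class Commit(NamedTuple):
    sha: str
    parents: tuple[str, ...]
    committed_at: int  # Unix timestamp of the commit


def find_time_anomalies(commits):
    """Return SHAs of commits dated earlier than one of their parents."""
    by_sha = {c.sha: c for c in commits}
    anomalies = []
    for commit in commits:
        for parent_sha in commit.parents:
            parent = by_sha.get(parent_sha)
            if parent is not None and commit.committed_at < parent.committed_at:
                anomalies.append(commit.sha)
                break
    return anomalies
```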

The described techniques boost and amplify the attacker’s capabilities. Each method enables the automation and scaling of particular dangerous activities. AI makes attacks more sophisticated and harder to detect. At the same time, they can be executed faster, threatening individuals and entire organizations.

Malware without Copilot – GitHub’s fake AI-generated repos

GitHub Copilot isn’t the only means cybercriminals use to spread malware. They also use AI-generated repos to distribute malicious software, like SmartLoader or Lumma Stealer. These repository setups aim to fool users into thinking they are downloading harmless ZIP files – from game cheats to cryptocurrency utilities.

Unfortunately, the ZIP files in their “Releases” sections are anything but harmless. The user can find legitimate components like lua51.dll inside, but the archives also contain:

- an obfuscated Lua script (userdata.txt) that connects to command-and-control servers

- a batch file (Launcher.bat) that runs the malware.
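The file combination described above can be checked before anyone unpacks a downloaded release. The sketch below lists a ZIP's members without extracting them and flags archives that ship a batch launcher next to the Lua runtime DLL; the heuristic and the function names are assumptions based only on the file names mentioned here, so it will miss renamed files.

```python
import zipfile

# Hypothetical heuristic: a script launcher shipped next to a Lua runtime file.
LAUNCHER_SUFFIXES = (".bat", ".cmd")
LUA_RUNTIME_FILES = ("lua51.dll",)


def is_suspicious_listing(member_names) -> bool:
    """Return True if the names include both a batch launcher and a Lua runtime."""
    names = [name.lower().rsplit("/", 1)[-1] for name in member_names]
    has_launcher = any(name.endswith(LAUNCHER_SUFFIXES) for name in names)
    has_lua_runtime = any(name in LUA_RUNTIME_FILES for name in names)
    return has_launcher and has_lua_runtime


def looks_like_lua_loader(archive) -> bool:
    """Inspect a ZIP file (path or file object) without extracting its contents."""
    with zipfile.ZipFile(archive) as zf:
        return is_suspicious_listing(zf.namelist())
```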

The malware, often distributed via Malware-as-a-service, steals all sorts of sensitive data. The deep obfuscation used in the samples suggests the infection could be pretty bad.

A group of threat actors called Water Kurita uses SmartLoader to deploy even more dangerous malware, like RedLine Stealer. This campaign has evolved to the point where it no longer relies on simple file attachments to distribute its malware.

Instead, the attackers generate convincing GitHub repos that look like the real thing. The repositories are even full of AI-generated content, with the GitHub page itself being a near-impossible-to-detect front for the next phase of the malicious operation.

Interestingly, the use of Lua actually makes the commands that comprise the malware much harder to understand.

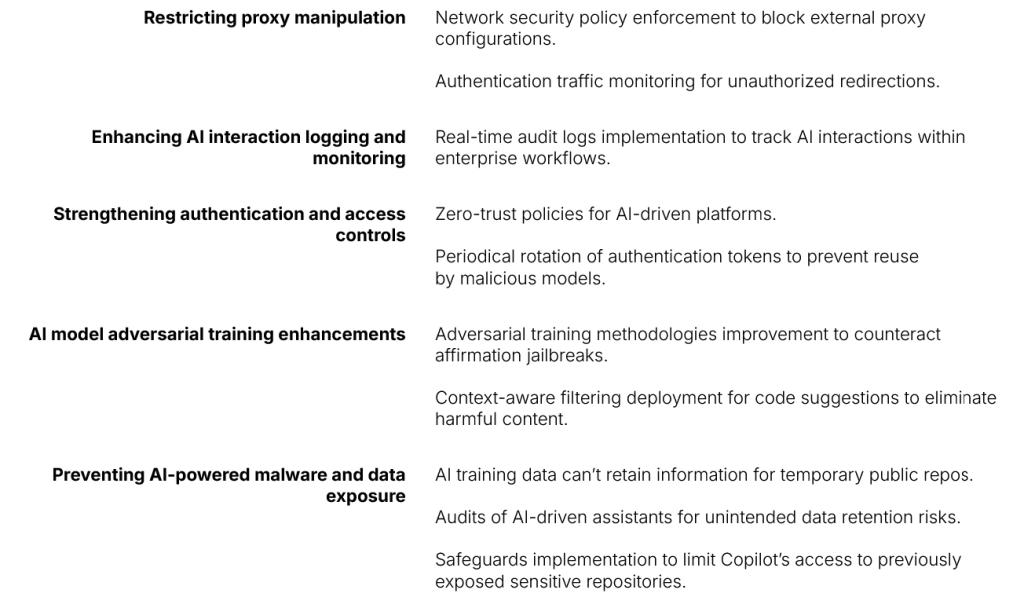

Problem mitigation. Boosting AI security in GitHub’s development workflows

Companies are forced to adopt proactive AI security measures to mitigate or minimize the risks associated with AI-supported GitHub (and GitHub Copilot). Despite GitHub’s response, classifying these threats as “informative” instead of critical security issues, IT teams must prevent:

- adversarial attacks

- unintended data exposure

- unauthorized AI resource exploitation.

The countermeasures should include five vital activities and policies:

Conclusion

GitHub Copilot (and fake AI-made repos) is a fine example of the power of AI-based coding assistants. It boosts developer productivity but can simultaneously introduce serious security vulnerabilities. These directly affect data safety, financial stability, and compliance in the broad sense.

But that’s a truism. The same goes for the security measures that organizations need to adopt as countermeasures. Yet, in this case, the trick is targeting and neutralizing AI-driven threats within the company’s software development workflows that rely on GitHub repos.

The goal is to balance innovation and the specialized implementation of AI security frameworks. The latter specifically should allow IT teams to:

- detect and prevent adversarial attacks

- enforce access controls and data security policies

- monitor AI activity for suspicious behavior

- implement code scanning that is aware of vulnerabilities in AI-generated code

- provide ongoing monitoring and updates to address emerging threats.

Awareness is the absolute foundation of avoiding AI-driven negative consequences. AI innovations and the methodologies and technology used in their development (e.g., LLMs) include flaws that require businesses to stay on guard.

The best example is above. AI coding assistants, like other LLMs, are susceptible to “jailbreaking.” That leads to a worn-out conclusion: Proactive security is the key, whether it concerns GitHub Copilot or other tools.
