Azure DevOps Pipelines 101: A Beginner’s Guide to CI/CD

In software engineering, deployment is not just about running a script and hoping it sticks. A big part of it is automation, which is a necessity rather than a luxury. That’s where Azure Pipelines steps in: it provides a robust CI/CD engine embedded in the Azure DevOps ecosystem.

Developers and DevOps engineers working with version control systems, containers, or even legacy monoliths can leverage Azure Pipelines. It offers the structured scaffolding needed to build, test, and deliver code at scale, including support for major languages.

How to use it: Azure Pipelines

It all starts with the deceptive simplicity of Azure DevOps structure. In short, you can describe it as “define how your code should be built, tested, and deployed. Then, let the platform handle the sequence.”

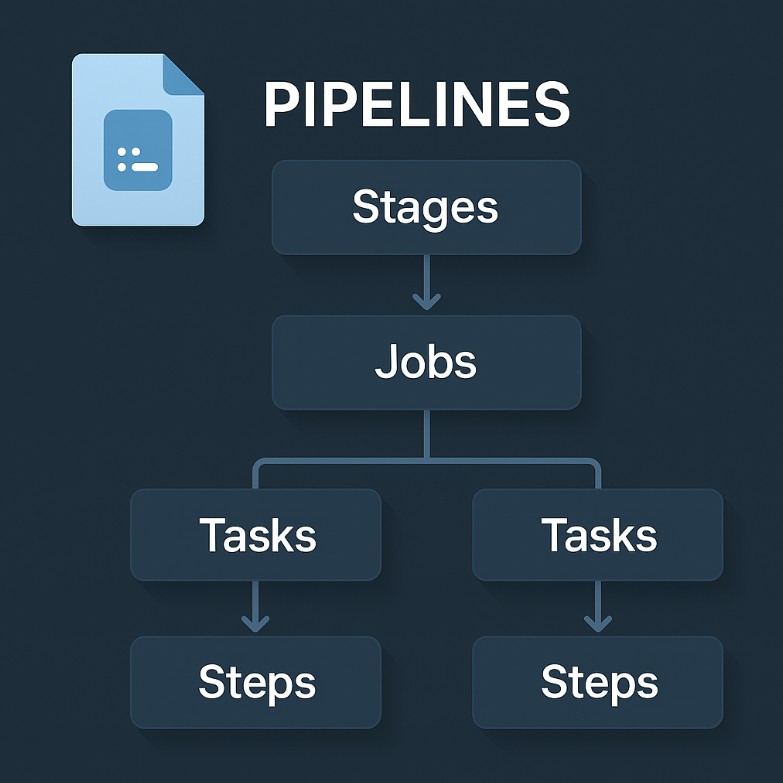

The ‘how’ resides in a YAML file. It becomes the blueprint for your pipeline run. But while syntax and indentation are essential, what truly matters is understanding the conceptual building blocks:

- pipelines

- stages

- jobs

- tasks

- steps.

A pipeline in Azure DevOps isn’t a single process. It’s a hierarchy that consists of one or more stages. Each stage contains one or more jobs. Every job runs a series of steps (scripts or tasks) in sequence on a single agent, while separate jobs can run in parallel, depending on how you configure the agent pool and how many parallel jobs your organization has available.
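To make the hierarchy concrete, here is a minimal sketch of a two-stage pipeline. The stage and job names and the echo commands are placeholders chosen for illustration, not part of any real project.

```yaml
# Hypothetical two-stage pipeline: all names and commands are placeholders.
trigger:
- main

pool:
  vmImage: 'ubuntu-latest'

stages:
- stage: Build
  jobs:
  - job: Compile
    steps:                      # steps inside one job run in sequence
    - script: echo "compiling"
      displayName: 'Compile sources'

- stage: Test
  dependsOn: Build              # this stage waits for Build to finish
  jobs:
  - job: UnitTests              # UnitTests and Lint do not depend on each other,
    steps:                      # so they can run in parallel if agents are free
    - script: echo "running unit tests"
      displayName: 'Unit tests'
  - job: Lint
    steps:
    - script: echo "linting"
      displayName: 'Lint'
```

Reading it top-down mirrors the list above: one pipeline, two stages, three jobs, and a handful of steps.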

Azure Pipelines

Azure Pipelines supports a wide array of project types, programming languages, and deployment targets. The service adapts to the workload, whether you’re:

- pushing Java microservices to Azure Container Registry

- compiling an iOS app on self-hosted macOS agents

- publishing .NET artifacts.

Azure Pipelines’ hybrid architecture distinguishes it from other CI systems. It offers both Microsoft-hosted agents for ephemeral, on-demand builds and the option to use your own infrastructure via self-hosted agents. This allows you tighter control over security, dependencies, and runtime environments.

Microsoft-hosted agents run on virtual machines. A fresh machine spins up quickly, runs your build job, and is then discarded, leaving only pipeline artifacts behind. You can also orchestrate parallel jobs or multi-stage builds that span staging and production environments – an essential capability for continuous delivery pipelines.
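The choice between hosted and self-hosted agents comes down to the `pool` setting. The sketch below shows both side by side; the pool name `MyMacPool` is an assumption and would need to match an agent pool that actually exists in your organization.

```yaml
jobs:
# Microsoft-hosted agent: a fresh VM for the job, discarded afterwards.
- job: HostedBuild
  pool:
    vmImage: 'ubuntu-latest'
  steps:
  - script: echo "running on a Microsoft-hosted agent"

# Self-hosted agent: 'MyMacPool' is a hypothetical pool name.
- job: SelfHostedBuild
  pool:
    name: 'MyMacPool'
    demands:
    - Agent.OS -equals Darwin   # only pick agents running macOS
  steps:
  - script: echo "running on our own infrastructure"
```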

Azure DevOps pipeline

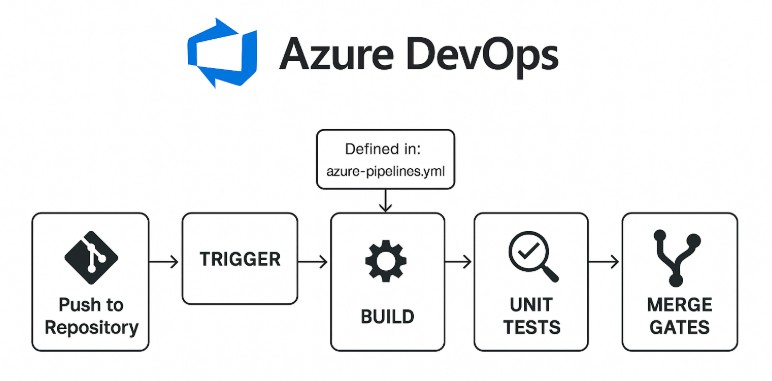

When creating a new pipeline in Azure DevOps, you begin by selecting the repository that holds your source code. Azure Repos, GitHub, and Bitbucket Cloud are supported directly. Other Git hosts, such as GitLab, can usually be connected as generic Git repositories, though typically with a more limited feature set.

Once the source is connected, you define the pipeline code in a YAML file, typically stored at the root of your repository.

Such a YAML definition becomes the single source of truth for how your builds and deployments execute. The pipeline automatically builds the code, runs unit tests, packages the output into executable files, and ships the release to the target platform.

Continuous Integration

This topic may seem well-worn, yet CI isn’t a buzzword. It’s a discipline. In Azure DevOps, continuous integration (CI) begins when code is pushed to the repository. Pipeline triggers – explicit or implied – initiate the build pipeline.

These pipelines can validate pull requests, enforce code quality rules, and block bad merges before they reach the mainline.
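As an illustration, an explicit trigger section might look like the sketch below. The branch and path filters are assumptions chosen for the example. Keep in mind that the `pr` section is honored for GitHub and Bitbucket Cloud repositories, while for Azure Repos pull request validation is set up through branch policies instead.

```yaml
# CI trigger: run on pushes to main and to release branches,
# but skip changes that only touch documentation.
trigger:
  branches:
    include:
    - main
    - release/*
  paths:
    exclude:
    - docs/*

# PR trigger: validate pull requests that target main.
# (For Azure Repos, use a build validation branch policy instead.)
pr:
  branches:
    include:
    - main

pool:
  vmImage: 'ubuntu-latest'

steps:
- script: echo "validate the change"
  displayName: 'Placeholder validation step'
```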

Running unit tests, running integration tests, and scanning for security flaws become routine checks rather than manual chores. Automation reduces the cognitive load on teams and reinforces engineering discipline.

Azure DevOps

It’s worth underlining that Azure DevOps is a complete lifecycle platform, not just a build server. It ties together:

- code repositories

- work tracking

- testing infrastructure

- artifact storage.

Azure DevOps services integrate tightly with pipelines, enabling teams to trace every pipeline run back to a specific commit, pull request, or work item. The Azure DevOps organization URL becomes the entry point to your pipeline ecosystem. It defines the workspace under which you operate.

Within that, you can create multiple projects, assign role-based access control, and set up audit trails for every change and deployment.

Continuous Delivery

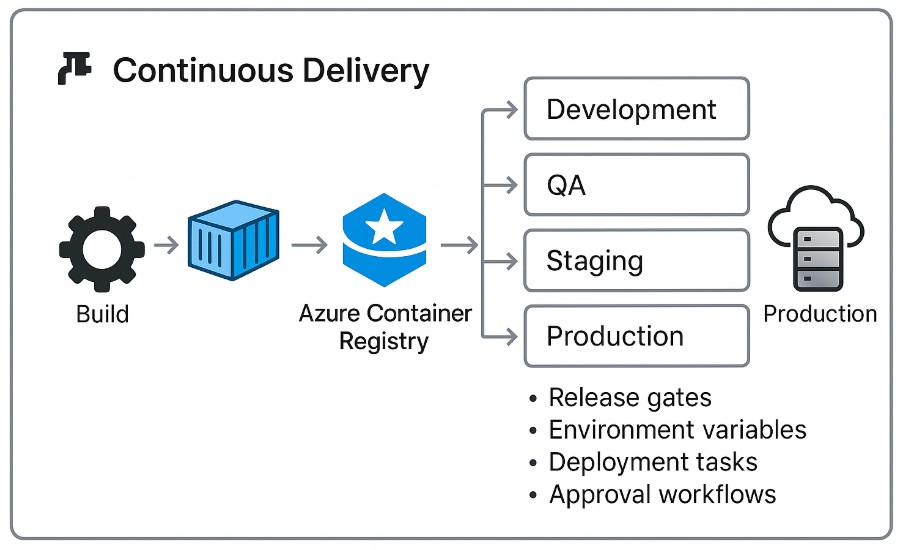

While continuous integration (CI) focuses on building and testing, continuous delivery extends the pipeline all the way to production. Azure Pipelines offers native container support, allowing you to create a release pipeline efficiently. It enables you to push images directly to Azure Container Registry and deploy containers with zero manual intervention.

Release pipelines manage the deployment process across various stages – development, QA, staging, and production. You can create a controlled and auditable release mechanism when you define:

- release gates

- environment variables

- deployment tasks

- approval workflows.
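In YAML pipelines, the closest equivalent to a classic release stage is a deployment job that targets an environment. The following is a minimal sketch under that assumption; the environment name `staging` and the `API_URL` value are placeholders. Approvals and checks are not written in the YAML itself. They are configured on the environment in the Azure DevOps UI.

```yaml
stages:
- stage: DeployStaging
  jobs:
  - deployment: DeployWeb
    displayName: 'Deploy to staging'
    pool:
      vmImage: 'ubuntu-latest'
    environment: 'staging'        # hypothetical environment; approvals attach here
    variables:
      API_URL: 'https://staging.example.com'   # placeholder value
    strategy:
      runOnce:
        deploy:
          steps:
          - script: echo "Deploying against $(API_URL)"
            displayName: 'Placeholder deployment task'
```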

Build pipeline

Looking at the anatomy, a build pipeline includes:

- source checkout

- dependency resolution

- build compilation

- artifact packaging

- publishing.

Whether using a Maven pipeline template, a .NET Core build task, or scripting languages like Bash or PowerShell, the pipeline provides hooks at every step.

The output of a build pipeline is one or more pipeline artifacts. Release pipelines or other downstream jobs then consume these. You can configure pipeline settings to optimize execution and manage caching. You can also use secure secrets management to handle sensitive configuration data.
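A rough sketch of caching and artifact publishing for an npm project could look like this. It assumes a `package-lock.json` at the repository root and a build that writes to `dist`; adjust both to your project.

```yaml
variables:
  npm_config_cache: $(Pipeline.Workspace)/.npm   # where npm keeps its download cache

steps:
- task: Cache@2
  displayName: 'Cache npm packages'
  inputs:
    key: 'npm | "$(Agent.OS)" | package-lock.json'   # cache is invalidated when the lock file changes
    restoreKeys: |
      npm | "$(Agent.OS)"
    path: $(npm_config_cache)

- script: npm ci
  displayName: 'Install dependencies'

- script: npm run build
  displayName: 'Build'

# Publish the build output as a pipeline artifact named 'app'
# so that later stages or other pipelines can download it.
- publish: $(System.DefaultWorkingDirectory)/dist
  artifact: app
```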

First pipeline, new pipeline run

From a beginner’s perspective, the first pipeline is both a milestone and a test of patience. You define the azure-pipelines.yml at the root of your project. A stripped-down example for a Node.js app may look like this:

    trigger:
    - main

    pool:
      vmImage: 'ubuntu-latest'

    steps:
    - task: NodeTool@0
      inputs:
        versionSpec: '16.x'
      displayName: 'Install Node.js'

    - script: |
        npm install
        npm run build
      displayName: 'Build and Compile'

    - task: PublishBuildArtifacts@1
      inputs:
        pathToPublish: 'dist'
        artifactName: 'app'

Once committed, the pipeline will trigger automatically on each push to main. That is your entry point into CI/CD.

CI/CD – Continuous Integration, Continuous Delivery

You shouldn’t view CI/CD as a feature but as a habit. Every commit becomes a release candidate, so code quality is validated constantly. In practice, you stop fearing deployment day because you’re deploying every day.

Azure Pipelines bolsters this lifecycle by treating your pipeline as code. You can define pipeline steps declaratively and manage environment variables, script arguments, and references to secrets like any other version-controlled asset.
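Here is one way this might look in practice. The variable group `app-secrets`, the secret `apiToken`, and the two shell scripts are hypothetical names used only for illustration. Only the reference to the secret lives in the repository; the value itself stays in Azure DevOps.

```yaml
variables:
- name: buildConfiguration
  value: 'Release'            # plain variable, versioned with the code
- group: app-secrets          # hypothetical variable group that holds apiToken as a secret

pool:
  vmImage: 'ubuntu-latest'

steps:
- script: ./build.sh --configuration $(buildConfiguration)   # hypothetical script
  displayName: 'Build with script arguments'

- script: ./deploy.sh                                         # hypothetical script
  displayName: 'Deploy'
  env:
    API_TOKEN: $(apiToken)    # secret variables reach a script only when mapped explicitly
```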

Further, code delivery can be faster because you’re confident in the process, not just the result.

Build, test, and deploy

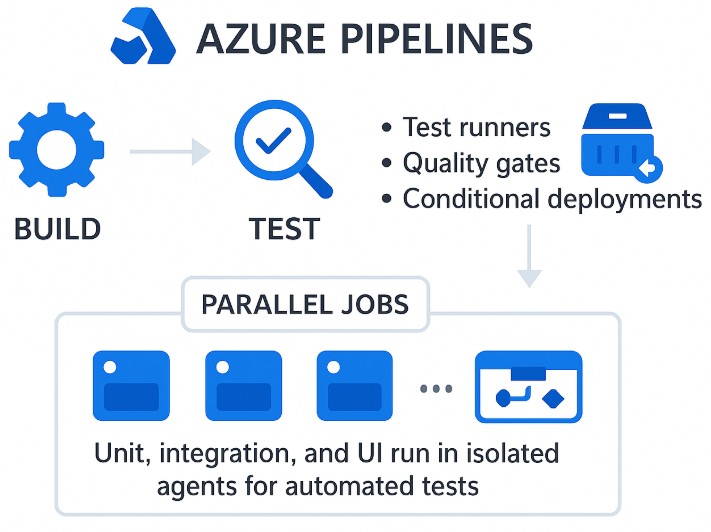

A mature DevOps lifecycle has three pillars – build, test, and deploy. They are hardwired into Azure Pipelines. Based on the success criteria, the pipeline supports:

- test runners

- quality gates

- conditional deployments.

Automated unit, integration, and UI tests run on isolated agents. You can configure a pipeline to run multiple jobs in parallel, shaving minutes off build times and providing feedback faster.
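A small sketch of parallel test jobs followed by a conditional deployment is shown below. The npm script names `test:unit` and `test:integration` are assumptions about the project's `package.json`.

```yaml
pool:
  vmImage: 'ubuntu-latest'

stages:
- stage: Test
  jobs:
  - job: Unit                 # Unit and Integration have no dependency on each other,
    steps:                    # so they run in parallel when enough agents are available
    - script: npm ci && npm run test:unit
  - job: Integration
    steps:
    - script: npm ci && npm run test:integration

- stage: Deploy
  dependsOn: Test
  # Deploy only if all tests passed and the run was built from main.
  condition: and(succeeded(), eq(variables['Build.SourceBranch'], 'refs/heads/main'))
  jobs:
  - job: Ship
    steps:
    - script: echo "deploying"
      displayName: 'Placeholder deployment step'
```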

This isn’t a nice-to-have. The build, test, and deploy approach is a survival tactic for teams that ship frequently.

Native container support

Containerized builds are a native feature in Azure Pipelines. They allow you to build Docker images and push them to the Azure Container Registry, as well as deploy containers to Kubernetes clusters or App Services.
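For example, building an image and pushing it to a registry can be done with the `Docker@2` task. In this sketch, `my-acr-connection` is a hypothetical Docker registry service connection and `myteam/myapp` is a placeholder repository name.

```yaml
pool:
  vmImage: 'ubuntu-latest'

steps:
- task: Docker@2
  displayName: 'Build and push image'
  inputs:
    command: buildAndPush
    containerRegistry: 'my-acr-connection'   # hypothetical service connection to the registry
    repository: 'myteam/myapp'               # placeholder image repository
    dockerfile: '$(Build.SourcesDirectory)/Dockerfile'
    tags: |
      $(Build.BuildId)
      latest
```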

Using containers removes variability between build environments. You no longer have to worry about a dependency working locally but failing in production. The entire build and runtime environment becomes versioned and repeatable.

Of course, pipeline steps can also run inside containers, reducing toolchain conflicts and enabling easier reuse across projects.
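Running steps inside a container takes a single extra setting. The sketch below uses the public `node:16` image as an example; any image with the toolchain you need should work in the same way.

```yaml
pool:
  vmImage: 'ubuntu-latest'

# All steps of this job run inside the container instead of directly on the agent VM.
container: node:16

steps:
- script: node --version
  displayName: 'Show the Node.js version provided by the container'
```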

Advanced workflows

No complex pipeline workflow is built from scratch. It evolves. Azure Pipelines supports advanced workflows that include:

- matrix builds

- conditional stages

- custom templates

- reusable pipeline code.
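Two of these features, matrix builds and templates, can be sketched together. The file path `templates/npm-test.yml` and the parameter `testCommand` are hypothetical names chosen for this example. The main pipeline file might look like this:

```yaml
# azure-pipelines.yml
jobs:
- job: Test
  pool:
    vmImage: 'ubuntu-latest'
  strategy:
    matrix:                     # one copy of the job per entry
      node16:
        nodeVersion: '16.x'
      node18:
        nodeVersion: '18.x'
    maxParallel: 2
  steps:
  - task: NodeTool@0
    inputs:
      versionSpec: $(nodeVersion)   # filled in from the matrix entry
  - template: templates/npm-test.yml   # hypothetical template file
    parameters:
      testCommand: 'npm test'
```

The template it references lives in its own file and declares the parameters it accepts:

```yaml
# templates/npm-test.yml
parameters:
- name: testCommand
  type: string
  default: 'npm test'

steps:
- script: npm ci
  displayName: 'Install dependencies'
- script: ${{ parameters.testCommand }}
  displayName: 'Run tests'
```

Keeping the shared steps in a template means several pipelines can reuse them without copying YAML around.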

You can create deployment strategies with canary releases and blue-green deployments. Another option is rolling updates. Integrating external services from the Azure DevOps Marketplace or calling REST APIs from tasks is no problem.

More importantly, you can embed security at every stage, from verifying the integrity of the source code to scanning container images and managing secrets with Key Vault.
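As a sketch of the Key Vault part, the `AzureKeyVault@2` task can pull secrets into the pipeline at run time. The service connection `my-azure-connection`, the vault `my-key-vault`, the secret `DbPassword`, and the script `migrate.sh` are all hypothetical.

```yaml
pool:
  vmImage: 'ubuntu-latest'

steps:
- task: AzureKeyVault@2
  displayName: 'Fetch secrets from Key Vault'
  inputs:
    azureSubscription: 'my-azure-connection'   # hypothetical Azure service connection
    KeyVaultName: 'my-key-vault'               # hypothetical vault name
    SecretsFilter: 'DbPassword'                # fetch only this secret
    RunAsPreJob: false

- script: ./migrate.sh                          # hypothetical script
  displayName: 'Run database migration'
  env:
    DB_PASSWORD: $(DbPassword)   # the fetched secret is mapped explicitly for the script
```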

Security – a critical oversight

While the Azure Pipelines engine enables powerful automation, it also introduces risk, especially when CI/CD definitions live only within the Azure DevOps organization and are tied to a specific cloud-hosted target environment.

The YAML file defining your pipelines is typically stored in your repos and benefits from version control.

However, the pipeline execution context – agent pools, service connections, secrets, and run history – is outside the repository scope.

That’s where a solution like GitProtect for Azure DevOps comes in handy.

It ensures that the repositories containing your YAML pipelines, scripts, and deployment configurations are continuously secured.

GitProtect for the target environment

The backup tool also captures organization-level data, such as permissions, repositories, and metadata. Thus, if something goes wrong, you can restore critical access structures and codebases.

In other words, GitProtect ensures the infrastructure-as-code backbone of your DevOps is versioned, encrypted, and recoverable.

Let’s wrap it up – protecting your pipeline infrastructure

The CI/CD process doesn’t begin with runtime, and it doesn’t end there either. While secrets, access tokens, and container registries often get the attention they deserve, the foundations, like the repositories, permissions, and pipeline code, are just as critical.

A compromised or lost repository containing your pipeline YAML definitions is not just an inconvenience. It’s a failure of reproducibility.

In most Azure DevOps workflows, the pipeline logic is stored directly in the version control system, alongside application code. That makes Azure repository backup a necessary condition for safeguarding CI/CD integrity.

However, relying solely on Git itself as your safety net ignores real-world failure modes: accidental deletion, permission misconfiguration, and malicious tampering.

GitProtect in action

This is where GitProtect for Azure DevOps becomes relevant again. It provides automated and encrypted backups of cloud-hosted pipelines and other critical components:

- source code repos (including pipeline YAML files and scripts)

- repository-level metadata

- access permissions

- wikis and documentation linked to code projects.

In other words, it secures the code-defined layer of your pipeline infrastructure. If your DevOps process is truly versioned as code, then that code and its underlying infrastructure need proper backup, audit, and restore capabilities.

By backing up the repositories where your pipelines exist, GitProtect helps maintain the reproducibility and recoverability of your CI/CD workflows. It does this even when your Azure DevOps environment encounters human error or external disruption.
