GitHub Storage Limits

Everything has its limits. Certainly, some astronomers, philosophers, or theologians will not agree with me, but in the IT world, this sentence is perfectly correct. What’s more, storage limits are one of the big problems of this industry. And what does it look like in the most popular hosting service? What is the GitHub storage limit? What is the GitHub max file size? Let me use my favorite answer – it depends.

GitHub limits

By default, a file larger than 50 MB triggers a warning that you may be doing something wrong, but the upload still goes through. The hard GitHub file size limit is 100 MB: anything above that threshold is blocked. If you upload through the browser, the limit is even lower, and the file can be no larger than 25 MB.
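As a rough illustration of these thresholds, the Python sketch below classifies a file by its size before you try to push it. The function name `classify_size` and the use of binary megabytes (1 MB = 1024 × 1024 bytes) are assumptions made for this example; GitHub's own checks may count bytes differently.

```python
MB = 1024 * 1024  # assumption: binary megabytes

BROWSER_LIMIT = 25 * MB   # uploads through the web interface
WARNING_LIMIT = 50 * MB   # above this size a warning is shown
BLOCK_LIMIT = 100 * MB    # above this size the push is blocked


def classify_size(size_bytes: int, via_browser: bool = False) -> str:
    """Return 'ok', 'warning' or 'blocked' for a file of the given size."""
    if via_browser and size_bytes > BROWSER_LIMIT:
        return "blocked"
    if size_bytes > BLOCK_LIMIT:
        return "blocked"
    if size_bytes > WARNING_LIMIT:
        return "warning"
    return "ok"


if __name__ == "__main__":
    for size in (10 * MB, 60 * MB, 120 * MB):
        print(size // MB, "MB ->", classify_size(size))
```

In practice you could feed it `os.path.getsize(path)` for every file you are about to commit.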

Of course, these are the default settings, but you can extend these limits and add larger files to the repo. Git LFS (Large File Storage) was created for this purpose. It is a feature that allows us to put much larger files into the repository, and this time the limits depend on our account type.

Let’s leave the sizes of individual files for a moment and check the limits related to the entire repository. How big is the GitHub repository size limit? Well, there is no unequivocal answer or hard threshold here. The recommended, ideal repository size is less than 1 GB, while staying under 5 GB is “strongly recommended”. This is not a hard limit, and in theory we can exceed it, but then we can expect support to contact us and ask whether we really need that much space.

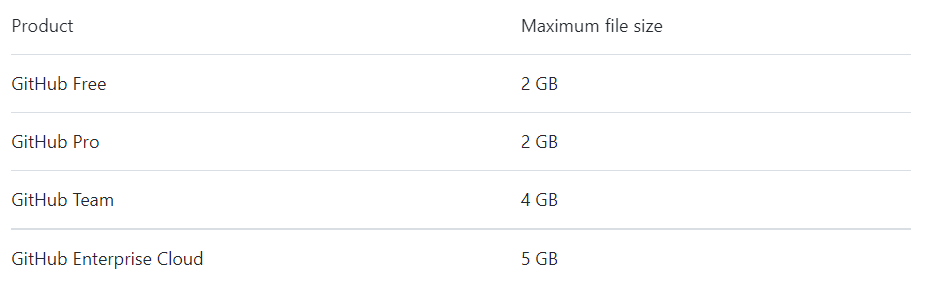

While GitHub recommends keeping repositories under 5 GB, Git LFS has defined per-file limits that depend on your plan: GitHub Free and Pro allow LFS files of up to 2 GB, GitHub Team up to 4 GB, and GitHub Enterprise Cloud up to 5 GB.

Let us stop here for a moment. If you are reading carefully, an alarm bell should be ringing now. Why does GitHub recommend keeping the entire repository under 1 GB, while the aforementioned LFS lets you upload a single 2 GB file on a GitHub Free account? Sit back, everything will be clear in a moment.

GitHub Large File Storage

We already know the individual limits, but how does this LFS mechanism work? Well, there’s a little ‘cheat’ here because these big files aren’t really stored in our repository! Let me quote official documentation:

“Git LFS handles large files by storing references to the file in the repository, but not the actual file itself. To work around Git’s architecture, Git LFS creates a pointer file which acts as a reference to the actual file (which is stored somewhere else). GitHub manages this pointer file in your repository. When you clone the repository down, GitHub uses the pointer file as a map to go and find the large file for you.”
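To make the idea of a pointer file more concrete, here is a minimal Python sketch that builds and parses one. It assumes the pointer layout from the public Git LFS specification (a `version` line, an `oid sha256:` line, and a `size` line); the helper names `make_pointer` and `parse_pointer` are made up for this example and are not part of any Git LFS tooling.

```python
import hashlib


def make_pointer(content: bytes) -> str:
    """Build the text of a Git LFS style pointer for the given content."""
    oid = hashlib.sha256(content).hexdigest()
    return (
        "version https://git-lfs.github.com/spec/v1\n"
        f"oid sha256:{oid}\n"
        f"size {len(content)}\n"
    )


def parse_pointer(text: str) -> dict:
    """Split a pointer file into its key/value fields."""
    fields = {}
    for line in text.splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


if __name__ == "__main__":
    big_file = b"\0" * 5_000_000           # stand-in for a large binary
    pointer = make_pointer(big_file)
    print(pointer)
    print(len(pointer), "bytes stored in the repository")
```

The point of the sketch is the size difference: the repository only holds a small text file, while the actual content lives elsewhere and is looked up by its hash.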

Another tricky part of LFS is that limits apply by default, whether or not you have a paid subscription: 1 GB of free storage and 1 GB a month of free bandwidth. What does that mean? Every push of a big file consumes storage, and each version counts separately. If you push two versions of a 500 MB file, you will use up the entire free storage quota. Bandwidth is consumed whenever a user (or GitHub Actions) downloads the file. Every download counts, so it can run out pretty quickly. It is important to monitor your LFS usage, because new files pushed to the repository will be rejected once you exceed your storage quota. Of course, it is possible to purchase larger limits.
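The arithmetic behind these quotas can be sketched in a few lines of Python. The example assumes decimal units (1 GB = 1000 MB) so that it matches the 500 MB example in the text; the function names are hypothetical, and real billing may round or count differently.

```python
GB_IN_MB = 1000  # assumption: decimal units, 1 GB = 1000 MB

FREE_STORAGE_MB = 1 * GB_IN_MB
FREE_BANDWIDTH_MB = 1 * GB_IN_MB


def storage_used(pushed_versions_mb):
    """Every pushed version of an LFS file counts toward storage."""
    return sum(pushed_versions_mb)


def bandwidth_used(file_size_mb, downloads):
    """Every download of an LFS file counts toward monthly bandwidth."""
    return file_size_mb * downloads


if __name__ == "__main__":
    used = storage_used([500, 500])       # two pushes of a 500 MB file
    print("storage left:", FREE_STORAGE_MB - used, "MB")

    pulled = bandwidth_used(500, 3)       # three clones or CI runs
    print("bandwidth over quota by:", pulled - FREE_BANDWIDTH_MB, "MB")
```

Under these assumptions, two pushes leave no free storage, and just three downloads of the same file already exceed the monthly bandwidth by 500 MB.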

Why is my repository so big?

The best answer to the question “how to upload large files to GitHub” is – do not upload large files to GitHub. Simple as that. A common problem is treating the repository as a bottomless bag into which we can put everything. More than once, I have seen log files, compiled classes, or other equally unnecessary binary files added to a repo. Likewise, instead of committing external libraries directly, it is better to use a package manager like npm, Maven, or Bundler to manage these dependencies. There is no clear-cut solution here because the problem depends on the specific situation in a given repo, but the official documentation may be helpful.
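Before deciding what to remove, it helps to see which files actually take up space in the history. The sketch below is one possible way to do that from Python: it pipes `git rev-list --objects --all` into `git cat-file --batch-check` and sorts the blobs by size. The function names are made up for this example, and it assumes `git` is installed and `repo_dir` points at a local clone.

```python
import subprocess


def parse_batch_check(output: str):
    """Parse 'type sha size path' lines and keep only blobs, largest first."""
    blobs = []
    for line in output.splitlines():
        parts = line.split(" ", 3)
        if len(parts) == 4 and parts[0] == "blob":
            _, sha, size, path = parts
            blobs.append((int(size), path, sha))
    return sorted(blobs, reverse=True)


def largest_blobs(repo_dir: str, top: int = 10):
    """List the largest blobs anywhere in the history of repo_dir."""
    objects = subprocess.run(
        ["git", "rev-list", "--objects", "--all"],
        cwd=repo_dir, capture_output=True, text=True, check=True,
    ).stdout
    sizes = subprocess.run(
        ["git", "cat-file",
         "--batch-check=%(objecttype) %(objectname) %(objectsize) %(rest)"],
        cwd=repo_dir, input=objects, capture_output=True, text=True, check=True,
    ).stdout
    return parse_batch_check(sizes)[:top]


if __name__ == "__main__":
    for size, path, sha in largest_blobs("."):
        print(f"{size:>12}  {path}")
```

Log files and compiled artifacts tend to show up at the top of such a list, which makes them easy candidates for a `.gitignore` entry.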

The situation is different when it comes to graphic files. They are often necessary items that simply need to be in the repository. But here, too, we can optimize by applying compression. And we don’t have to do it ourselves, as there are ready-made apps in the GitHub Marketplace that will do it for us. For example, I recommend Imgbot, which compresses image files without any loss in quality.

For distributing very large files, such as binaries or datasets, another effective method is to use GitHub Releases. Each file attached to a release must be smaller than 2 GB.

Large files vs. backup solutions

That should be our mantra – make a backup before removing anything from the remote repository. Make a backup before making any changes to the repo configuration. And repeat that over and over again. But here, too, the size of the repository is of great importance. A “light” repo allows us to operate faster and more efficiently. It also makes it easier to back up the repository and recover it after a failure. A smaller repo is beneficial in any situation.

Also, before we start cleaning up our repo or changing its configuration, it would be ideal to first make a proper backup. For example, GitProtect.io allows you to back up not only GitHub repositories and metadata, but also GitHub LFS, keeping all your critical assets secure. What’s more, during the restore process you can easily enable or disable LFS restore. In this case, if a disaster takes place, you can decide whether it is necessary to restore your LFS data immediately for workflow continuity, or whether you can restore it later and give priority to the other metadata your team needs to keep coding.

Frequently Asked Questions

How do I check my GitHub storage limit?

To check your GitHub storage limit you have 3 possible options that depend on what account you have: a personal account, an organization, or an enterprise account.

For personal accounts, click on your profile picture in the top right corner and then click ‘Settings’. Next, go to the ‘Access’ section and click ‘Billing and plans’, then ‘Plans and usage’. Now you can view storage details under ‘Git LFS Data’. If you need to check LFS data usage by repository, simply select ‘Storage’ or ‘Bandwidth’.

As for an organization, to check your storage limits click your profile picture, go to ‘Settings’, and in the ‘Access’ section, click ‘Organizations’. Then, click ‘Settings’ next to your organization. Now, if you are the owner of the organization, you can find ‘Billing and plans’ in the ‘Access’ section. You should be able to see details regarding your storage under ‘Git LFS Data’. Alternatively, select ‘Storage’ or ‘Bandwidth’ to view LFS data usage by repository.

With enterprises, click on your profile picture, and then ‘Your enterprises’. You will see the list of enterprises, and you need to select the one you want to check the storage information for. In the enterprise account sidebar, located on the left side of the page, click on ‘Settings’. There click ‘Billing’ and go down to the ‘Git LFS’ section.

What is the maximum number of files in Git?

There is no established limit on the number of files in a GitHub repository. However, you must pay attention to the storage and file size limits.

How long does GitHub store data?

GitHub stores data such as artifacts and log files for 90 days by default before deleting them automatically. For public repos you can set the retention period between 1 and 90 days, and for private repos between 1 and 400 days. A deleted repository is retained for 90 days and then permanently deleted.

It is also worth mentioning the GitHub Archive Program, an initiative that helps preserve data for future generations. A snapshot of public repositories taken on February 2, 2020 is stored in the Arctic Code Vault, where it will be kept for as long as 1,000 years.

How to clean up GitHub storage?

To clean up your GitHub storage, you can start by rebasing your repo. Then delete all unused branches and squash your commit history. After that, you can run an aggressive Git garbage collection. Other useful steps include deleting local references to remote branches that no longer exist. You can also use ‘git reflog expire’ to remove old reflog entries; for example, you can expire all entries older than two months.

An important thing to remember when cleaning up repos and deleting parts of them is to keep backup copies. You may delete important information by accident, or the data may turn out to be useful later, for example as a reference.
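The cleanup steps can be collected into a small Python helper. This is only a sketch under a few assumptions: the remote is named `origin`, the two-month cutoff is written as `2.months.ago`, and the function names are invented for this example. It only touches the local clone, and it defaults to a dry run that prints the commands instead of executing them.

```python
import subprocess


def cleanup_commands(expire: str = "2.months.ago"):
    """Return the git commands for a local cleanup, in order."""
    return [
        # drop remote-tracking branches that no longer exist on the remote
        ["git", "remote", "prune", "origin"],
        # expire reflog entries older than the given date
        ["git", "reflog", "expire", f"--expire={expire}", "--all"],
        # repack aggressively and prune unreachable objects
        ["git", "gc", "--aggressive", "--prune=now"],
    ]


def run_cleanup(repo_dir: str, dry_run: bool = True):
    """Print each command and, unless dry_run is set, execute it."""
    for cmd in cleanup_commands():
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, cwd=repo_dir, check=True)


if __name__ == "__main__":
    run_cleanup(".")  # dry run: only prints the commands
```

Keeping the dry run as the default is a deliberate choice here, because expiring the reflog and pruning objects removes the usual safety net for recovering lost commits.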

How many repos can I have on GitHub?

The number of repos that you can have on GitHub is unlimited for personal accounts. It does not matter whether you are using GitHub Free or GitHub Pro: the number of public and private repositories that you can own is unlimited.

As for managed user accounts or organization accounts, it depends on several factors related to policies, security, and management. Some organizations may limit the number of repos a user can create.

How to bypass GitHub’s 100MB limit?

To bypass GitHub’s limit of 100 MB, you can use Git Large File Storage (Git LFS). It stores a reference to your file in the repo by creating a pointer file, while the actual file is stored at a different location. When you clone your repository, the pointer file, which is managed by GitHub, serves as a map that helps GitHub locate your large file.

With GitHub Free & Pro the maximum file size is 2 GB, for GitHub Team it’s 4 GB and for GitHub Enterprise Cloud – 5 GB.
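Put together, the 100 MB Git limit and the per-plan LFS limits give a simple decision rule, sketched below in Python. The plan keys (`"free"`, `"pro"`, `"team"`, `"enterprise_cloud"`), the function name `how_to_store`, and the use of binary units are assumptions made for this example.

```python
MB = 1024 ** 2  # assumption: binary units
GB = 1024 ** 3

GIT_MAX_FILE = 100 * MB  # regular Git push limit

# maximum size of a single Git LFS file per plan
LFS_MAX_FILE = {
    "free": 2 * GB,
    "pro": 2 * GB,
    "team": 4 * GB,
    "enterprise_cloud": 5 * GB,
}


def how_to_store(size_bytes: int, plan: str) -> str:
    """Return 'git', 'lfs' or 'too large' for a file on the given plan."""
    if size_bytes <= GIT_MAX_FILE:
        return "git"
    if size_bytes <= LFS_MAX_FILE[plan]:
        return "lfs"
    return "too large"


if __name__ == "__main__":
    for size in (80 * MB, 3 * GB):
        print(size // MB, "MB on team ->", how_to_store(size, "team"))
```

A 3 GB file, for instance, fits within the Team limit but would be too large on a Free or Pro account.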

How big is the storage limit on GitHub vs GitLab?

The storage limits for GitHub and GitLab differ and depend on the repository type. For free accounts, GitHub has unlimited storage for public repos and a 500 MB limit for private ones. In contrast, GitLab’s limit is 5 GB for both private and public repositories.

The article was originally published on January 27, 2022