The Importance of Data Retention Policies in DevOps Backup and Recovery

How long should an IT company keep its backups? This question pops up in the head of every Security and IT leader as soon as the company faces the need to follow its legal compliance. Though, not only laws and regulations make companies retain specific types of data during a certain period of time.

Data retention policies in terms of DevOps backup

When it comes to the definition of the data retention policy, we should remember about three unchanged components a data retention policy includes:

- what DevOps data your organization should store, backup or archive,

- where this sensitive data, excess data, and other data are supposed to be kept,

- and how long your DevOps data should be stored – in other words: over what period of time you might need to recover them.

DevOps teams need to analyze and determine the appropriate data retention policy for their backups (as it will help them to bring some sanity to their data management). To do so, they need to take into account a few factors to ensure: compliance requirements, recovery objectives, and cost considerations.

Metrics that influence retention

Recovery Point Objective (RPO) and Recovery Time Objective (RTO) are usually the key metrics that influence the choice of a company’s retention policy.

The RPO is the maximum acceptable time gap between the last data backup and the amount of data the company can lose (yet keep its workflows continuous) between those backups. For measuring your RPO, you need to determine and identify all the critical DevOps data, the amount of data your company can afford to lose but keep its working processes steady.

The RTO is the maximum acceptable time that it takes to identify the problem, remediate it and restore your organization’s data after the event of failure. For example, if you set your RPO of one hour and an RTO of five hours, it means that your data retention policy specifies your backups are performed every hour, and the backups are retained for at least five hours.

Compliance Requirements

To be competitive organizations usually pass security audits and follow strict security regulations. Thus, to comply with such requirements a company needs to set its retention policy to meet the standards, such as SOC 2 Type II , ISO 27001, HIPAA, etc.

For example, according to HIPAA “documents must be retained for a minimum of six years from when the document was created, or – in the event of a policy – from when it was last in effect.” The same time period (six years) is the guideline period for most types of data protection regulation GDPR (General Data Protection Regulation), which concerns those companies that process or store the personal information of EU citizens.

Find out all you need to know about regulatory compliance requirements in our blog post on GitHub Compliance.

Cost Considerations

Longer data retention periods may require more storage space and, consequently, increased storage costs. On the other hand, shorter retention periods may increase the risk of data loss, and hence, financial loss. That’s why it is important to find a balance between those considerations to make your data retention policy cost-effective.

Legal automated compliance

It guarantees that the organization is complied with legal obligations and regulates the storage of different types of data by the established policy.

Reduced storage costs

Storing and managing data can be expensive, especially if the data volumes continue to grow (and we know that data grows in progression!). With an effective data retention policy, the company can control costs by reducing the amount of data it needs to store and manage.

Operational efficiency

When a company has a clear retention policy, it can manage its data more efficiently. Organizations can avoid storing unnecessary data if they know the exact terms for disposing of the data. It can for sure improve performance and reduce storage requirements.

Risk management

Retaining data for longer than necessary can increase the risk of data breaches, which leads to unnecessary financial loss. Having a good data retention policy can help reduce this risk by ensuring that the data is disposed of when it is no longer needed.

Find out about security incidents GitHub faced in 2022 and Atlassian faced in 2022 in our blog posts.

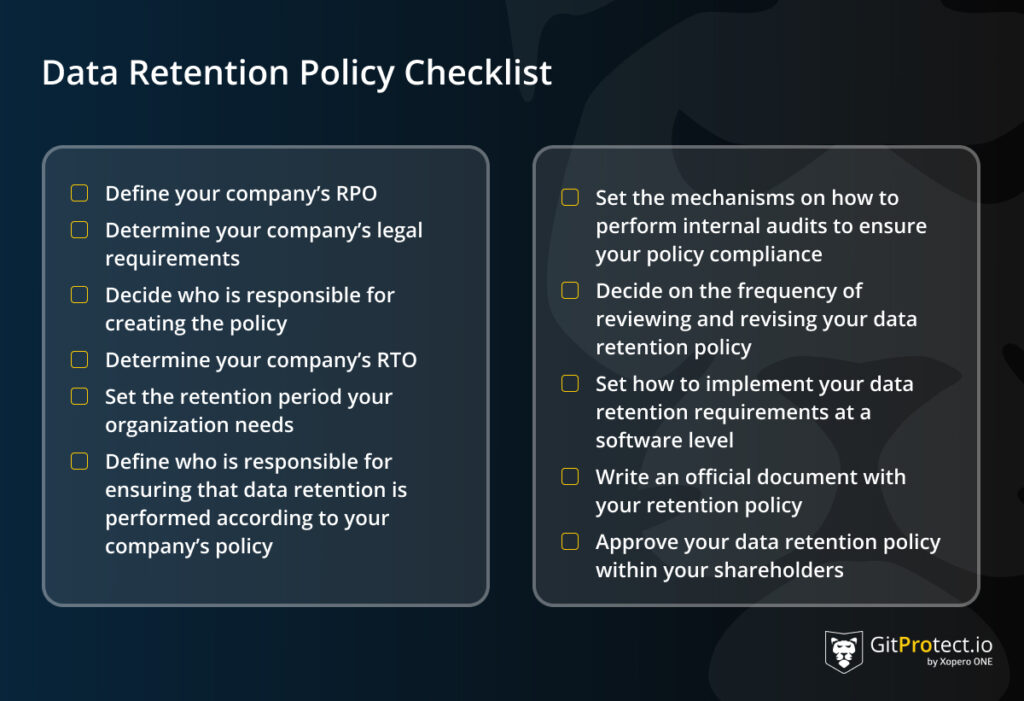

Data Retention Policy Best Practices

We have already mentioned that there is no one-size-fits-all approach to building your company’s Data Retention Policy. As in any other industry, in DevOps, data retention is aimed at data being stored for the right amount of time, and it is accessible and available at any time. Here are the best practices for building a Data Retention Policy in terms of DevOps backup:

Determine legal requirements

The first thing the organization should do is to determine the laws and regulations that govern its data retention requirements. Thus, the company will understand which requirements it needs to incorporate into its own data retention policies.

Determine the retention period

Next, it’s important to identify what data needs to be retained and an appropriate data retention period for your organization. Depending on the type of data and legal and regulatory requirements, it will vary.

Not to overload your storage system with copies, you can archive data that is no longer actively used by your company, yet still needed for long-term retention. Though, you shouldn’t mix up these two terms as backup and archive are absolutely different things.

| Backup | A backup is a compressed, deduplicated copy to some other storage location to restore in the event of failure, data loss, damage, error, or corruption. | A backup is used to restore data to its previous state in the event of failure to eliminate downtime and resume business processes. Backup is an obligatory software to meet any security and legal regulations. |

| Archive | An archive isn’t a copy, but some inactive historical data that the company needs to keep due to regulatory compliance requirements. Thus, you can move such data to secondary or tertiary storage according to your business requirements. | Archives are used for long-term retention to meet legal or compliance reasons. |

Automate the process

Consistency and efficiency – that’s what you get after automating data retention policies. You can do it with the help of automation and backup tools, like GitProtect.io or manual scripts to manage the data retention process.

You can read more about DevOps CI/CD pipelines in our other blog posts: Bitbucket CI/CD, GitHub CI/CD, and GitLab CI/CD.

Store your data securely

Be sure that all your DevOps data is stored securely during the data retention period. For that reason, you should be sure that your storage corresponds to international security standards and is WORM-compliant. At the same time, you should use appropriate encryption, access controls, and data backup mechanisms to ensure your DevOps data isn’t compromised.

Test the data recovery processes

To be sure that your data is easily recoverable in any event of failure, you should test your data recovery process on a regular basis. This testing should include:

- testing backups

- restoring data

- verifying that the data is intact.

Document the policy

Documenting the data retention policy will help your team to understand their responsibilities within your data retention policy. That’s why proper communication of your retention strategy is an important aspect.

Review and update the policy regularly

By reviewing and updating your data retention policy on a regular basis, you will be sure that your strategy meets regulatory requirements and organizational needs.

GitProtect.io’s DevOps Backup and Recovery features for your Data Retention Policy

We have already explained what a data retention policy is and what best practices to follow to build one that meets and fulfills all your organization’s requirements. Now let’s look at the DevOps backup features you need to pay attention to make your critical DevOps data accessible and recoverable in any event of failure.

Automated backups

To enhance your DevOps time efficiency, your DevOps backup solution should be automated. Your DevOps team should have the possibility to simply schedule backups, ensuring that no data is lost due to a human error or a system failure.

Actually, a backup schedule is one of the most crucial elements when it comes to creating a backup plan. You should have the possibility to create full, differential, and incremental copies of your GitHub, Bitbucket, GitLab, or Jira environments, for example using a Grandfather-Father-Son (GFS) rotation scheme. It will help to control your storage space without overloading it.

Another important aspect here is the possibility to control your rotation scheme by keeping your backups infinitely, by time, or by the number of copies.

Are you switching to a DevSecOps operation model? Remember to secure your code with the first professional GitHub, Bitbucket, GitLab, and Jira backup.

Flexible retention

When you choose a backup software for your DevOps environment, retention settings can be a game-changer feature. Sometimes organizations have to keep their data for years to meet their compliance, legal, or industry requirements. Thus, your backup solution should permit you to set different retention opportunities for every backup plan.

For example, with GitProtect.io you can indicate retention by the number of copies you want to keep or the time each copy should be kept in the storage; what’s more, you can disable the rules and keep your backup copies infinitely.

Redundancy

To prevent data loss from any event of failure, it’s a good idea to keep your critical business data in different locations. The best way here is to follow the 3-2-1 backup rule, within which you have no less than 3 backup copies in two (or more) different storage instances, one of which is offsite.

Encryption

Data retention means that you need to store data for a long time. That is why to eliminate the consequences of data breaches and safeguard it against ransomware you should consider having your data encrypted in-flight and at rest. Moreover, to level up your DevOps backup protection, it’s good when your backup provider permits you to create your own encryption key. In this case, you are going to be the only person who knows the key.

Monitoring center

Your backup solution should permit you to monitor your backup performance easily from a single place. Data-driven dashboards, visual statistics, real-time actions – all those features should save your DevOps time. Moreover, you should get notifications (email, Slack, advanced audit logs) on how the backup is performed.

Restore and Disaster Recovery

You need retention to get back to the previous copy due to different reasons: to make correct unintentional repository or branch deletion, and to get back to coding fast after an event of failure. And we can make this list longer. Though, the most important is which mechanisms you can use to resume your working processes fast eliminating any possible downtime. Thus, your backup and DR provider should provide different recovery opportunities for your business continuity, such as point-in-time restore, granular recovery, restore to your local device, or cross-over recovery to a different Git hosting platform (for instance, from GitLab to GitHub, and vice versa), granular recovery, and easy data migration.

GitProtect.io and its retention feature

The majority of vendors offer from 30 to 365 days of retention, which obviously isn’t enough. With GitProtect.io’s comprehensive DevOps backup and Disaster Recovery solution, you can forget about any limits. It provides its customers with unlimited retention. Thus, you can keep your archived old unused repositories up to forever to meet your compliance.