Hacking AI vs. DevOps security – how attackers impose their will on AI and how to protect your DevOps data

Let’s plunge into the intricacies of AI vulnerabilities, the ways cybercriminals use AI, and the significance of DevOps security. Find out which practices can help you protect your data (small hint: backup is among them). Test a DevOps backup solution for free during a 14-day trial period.

DevOps teams rely heavily on AI, and not only on AI coding assistants. It boosts their productivity, saves time, and the list goes on. However, what about the risks organizations run into when they take AI for granted? Can it lead to data breaches or new vulnerabilities? In its 2024 cybersecurity, risk, and privacy predictions report, Forrester warns that DevOps teams eager to improve their productivity with AI can face exactly these problems: “At least three data breaches will be publicly blamed on AI-generated code.”

AI in DevOps processes

AI development assistants are gaining more and more popularity among DevOps teams. According to the already-mentioned Forrester report, 49% of business and technology professionals have already been piloting and implementing AI coding assistants. In its 2023 Global DevSecOps Report, GitLab states that 65% of “developers said that they are using artificial intelligence and machine learning in testing efforts or will be in the next three years.” Moreover, a Gartner survey finds that no less than 79% of corporate strategists recognize AI as a critical aspect of their success over the next few years. So, what makes the integration of AI tools into DevOps processes so attractive?

In a few words: automation, improved efficiency, and reduced costs. AI is making its mark across the software lifecycle, enhancing everything from code creation to deployment and helping ensure that speed does not compromise quality or security. The key benefits of integrating AI tools into the development process include:

Predictive analysis to forecast challenges

By sifting through vast amounts of logs, metrics, and other data, AI algorithms can identify patterns and anomalies faster. Thus, DevOps teams can foresee potential system failures or bottlenecks before they escalate into major issues. For instance, by analyzing past incidents and their triggers, AI can predict when a similar event might occur, enabling teams to take preemptive action.
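To make the idea of spotting anomalies in metrics more concrete, here is a minimal sketch that flags outliers with a plain z-score. It is a deliberately simple statistical stand-in for the AI-driven analysis described above, not what any particular product does. The function name `find_anomalies`, the threshold, and the latency values are all assumptions made up for illustration.

```python
from statistics import mean, stdev

def find_anomalies(values, threshold=3.0):
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        # All values are identical, so nothing stands out.
        return []
    return [(i, v) for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]

# Hypothetical response times in milliseconds; the spike is invented for illustration.
latencies_ms = [102, 98, 101, 99, 100, 103, 97, 100, 450, 101, 99, 102]
anomalies = find_anomalies(latencies_ms, threshold=2.5)
```

In practice, teams would likely compare each new value against a rolling baseline rather than one global window, so that a single spike does not inflate the standard deviation it is measured against.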

Automation for productivity and efficiency

The essence of DevOps lies in automation, and AI elevates it to new heights. AI-driven automation tools can optimize the deployment pipeline, ensuring code is tested, integrated, and deployed with unprecedented speed.

For example, GitLab has already built AI into its platform, so dev teams can write and deliver code more efficiently by viewing code suggestions while typing. Moreover, the vendor has recently presented GitLab Duo, which integrates AI-driven tools directly into the DevSecOps platform to streamline workflows and boost efficiency across the software development lifecycle. GitHub also has its own AI-powered coding assistant, GitHub Copilot, which supports a wide range of frameworks and programming languages. Atlassian provides an AI-driven virtual teammate aimed at accelerating teamwork.

What’s next – GenAI?

Developers will certainly continue implementing AI-assisted software in their DevOps lifecycles. In its Top Strategic Technology Trends for 2024, Gartner predicts that by 2026 “over 80% of enterprises will have used GenAI APIs and models and/or deployed GenAI-enabled applications in production environments.” Thus, software engineers will be able to devote more time to strategic activities and less time to writing code. However, what about the risks of implementing GenAI in the development process?

“GenAI presents an opportunity to accomplish things never before possible in the scope of human existence,” said Daryl Plummer, Distinguished VP Analyst at Gartner. “CIOs and executive leaders will embrace the risks of using GenAI so they can reap the unprecedented benefits.”

Source: Gartner’s Press Release: Gartner Unveils Top Predictions for IT Organizations and Users in 2024 and Beyond

AI Vulnerabilities & Offensive AI threaten your data

While DevOps teams use AI to boost productivity, efficiency, and speed, their source code can still contain vulnerabilities. Although AI-driven tools are powerful, they are still new and need a lot of attention from the DevOps side. Have you ever typed a line and, after re-reading it, noticed typos or other mistakes? The same happens with code, and such mistakes can lead to vulnerabilities that attackers may use against you. Moreover, due to its novelty, AI itself certainly has vulnerabilities. So, let’s get down to the nitty-gritty of how cybercriminals use AI and impose their will on it.

How cybercriminals attack AI

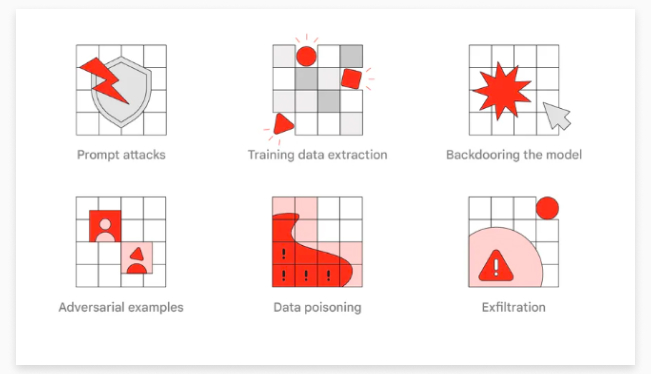

Some time ago Google created a ‘red team’ to explore how malicious actors could attack AI systems. In their report, they outlined the most common tactics, techniques, and procedures (TTPs) that attackers use against AI systems:

Source: Common types of red team attacks on AI systems

Let’s go through them one by one:

Data poisoning

AI systems face threats from data poisoning, where malicious data is introduced into the training sets, leading the AI to make incorrect or biased decisions. This covert risk can have extensive repercussions, often becoming apparent only after significant damage has occurred. Targeted data poisoning and clean-label attacks, where the poisoned samples keep correct-looking labels and are therefore hard to spot during review, represent sophisticated strategies that attackers may employ to compromise AI systems.

Prompt attacks

With carefully crafted prompts, attackers can make the large language models (LLMs) that power GenAI products and services follow their instructions. For example, cybercriminals can embed malicious instructions in a phishing email that trick AI mail tools into classifying it as legitimate. “By including instructions for the model in such untrusted input, an adversary may be able to influence the behavior of the model, and hence the output in ways that were not intended by the application,” states Google AI Red Team’s report.

Adversarial attacks

AI systems, even sophisticated ones like advanced image classifiers, are susceptible to adversarial attacks. A notable example is an image classifier that, with slight alterations to an image of a panda, can be misled into identifying it as a gibbon. These subtle manipulations, often imperceptible to the human eye, highlight the susceptibility of AI systems to deception.
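The panda-to-gibbon effect can be sketched on a toy model. The example below is a minimal illustration, assuming a hypothetical four-feature linear classifier with made-up weights. It nudges every input feature by the same small amount in the direction that lowers the score, which is the idea behind gradient-sign attacks. Real attacks target deep neural networks and use the gradient of the loss; for a linear model that gradient is simply the weight vector.

```python
def score(weights, bias, x):
    """Linear score: positive means 'panda', negative means 'gibbon'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def classify(weights, bias, x):
    return "panda" if score(weights, bias, x) >= 0 else "gibbon"

def sign(v):
    return (v > 0) - (v < 0)

def perturb(weights, x, epsilon):
    # Shift each feature by at most epsilon against the sign of its weight,
    # which lowers the score as much as possible for that budget.
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

# Hypothetical model and input, chosen only for illustration.
weights = [0.5, -0.25, 0.75, -0.5]
bias = 0.0
x = [0.5, 0.5, 0.25, 0.25]

x_adv = perturb(weights, x, epsilon=0.125)
original_label = classify(weights, bias, x)
adversarial_label = classify(weights, bias, x_adv)
```

No feature moves by more than 0.125, yet the predicted label changes, because the small shifts all push the score in the same direction.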

Training data extraction and security

The security of training data is important, as attackers may target AI systems to extract or exfiltrate this data, posing additional threats to data privacy and security. Employing techniques such as differential privacy in training data and secure multi-party computation for training models on encrypted data can enhance the security of AI systems.
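As a rough illustration of differential privacy, the sketch below applies the classic Laplace mechanism to a counting query. This is a basic building block rather than a full differentially private training procedure, and the function names, the record layout, and the chosen epsilon are assumptions for the example.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two independent exponential variables with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of records matching the predicate."""
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    # Adding or removing one record changes a count by at most 1 (sensitivity 1),
    # so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical records; smaller epsilon means more noise and stronger privacy.
records = [{"flagged": True}, {"flagged": False}, {"flagged": True}, {"flagged": True}]
noisy = private_count(records, lambda r: r["flagged"], epsilon=1.0)
```

Each individual answer is blurred, so a single record is harder to infer from the output, while the average over many queries stays close to the true count.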

Backdoor attacks

In this case, an attacker tries to secretly change the behavior of the AI model to generate inaccurate outputs by using certain “trigger” terms or characteristics. They can achieve it in different ways: by directly changing the weights of the model, or by changing the model’s file representation.
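One simple safeguard against a tampered model file is to compare its checksum with a value recorded when the model was approved. The sketch below assumes such a trusted SHA-256 value is stored somewhere the attacker cannot change; the function names are illustrative. Note that this only detects changes to the file after the reference hash was taken, not a backdoor that was already present at that time.

```python
import hashlib
import hmac

def sha256_of_file(path, chunk_size=1 << 20):
    """Hash the file in chunks so large model files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_model_file(path, expected_sha256):
    """Return True only if the file matches the trusted reference hash."""
    return hmac.compare_digest(sha256_of_file(path), expected_sha256.lower())
```

A loader could call `verify_model_file` before deserializing the weights and refuse to start if the check fails.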

Exfiltration

During a simple exfiltration attack, the attacker copies the model’s file representation. Cybercriminals with more resources could try more sophisticated techniques, such as querying a particular model to find out its capabilities and then using that data to create their own model.

The other side of the coin – AI abuses & misuses

Cybercriminals use AI to find vulnerabilities in organizations’ security systems and create new malware. What’s more, by using AI, bad actors can test their attack techniques and alter their attack strategies in real time, which gives them a significant advantage and introduces new threats.

Moreover, a common strategy of bad actors is to attack the vulnerabilities of the very AI and ML models that are designed to identify and block breach attempts. There are a few ways cybercriminals can use AI to launch their cyber attacks:

AI-supported password cracking

Bad actors do their best to employ AI and ML to improve their algorithms for guessing users’ passwords. According to the Home Security Heroes report, AI can easily crack commonly used passwords. The researchers used PassGAN, an AI password cracker, and ran it against a list of more than 15M passwords. As a result, 51% of common passwords can be cracked in less than a minute, and 81% of commonly used passwords can be guessed within a month.

Source: Home Security Heroes report

AI impersonation

Cybercriminals can abuse AI to imitate human behavior and trick bot detection systems on social media platforms and communities. For example, by imitating human-like usage patterns, threat actors can use a compromised system to generate fraudulent streams for a particular artist and profit from them.

AI-generated phishing emails

We all read tens of emails every day. You can get email notifications from the apps you’re using or from colleagues. And what if one of those emails is a phishing one? Phishing emails used to contain mistakes and were easy to spot, but AI now helps malicious actors generate well-written and convincing content for phishing scams.

Moreover, using AI, cybercriminals can also personalize emails by imitating tone, language, and style. This makes it easier for them to deliver ransomware and compromise your data.

Deepfakes

Malicious actors can misuse AI to craft fake media and spread false information. For example, imagine you use templates created by a developer and influencer you trust. Then you see a video in which he or she talks about improvements and updates and shares a link. Will you follow it? More likely yes than no. By following the link you can run into ransomware, and the cybercriminal has reached the goal.

AI-supported hacking

It’s no secret that the Internet has had vulnerabilities since its appearance, so there are always new patches, developments, and improvements in this area. Cybercriminals exploit those vulnerabilities for their own gain, and the development of AI can make that easier: threat actors weaponize AI frameworks to help them hack vulnerable hosts.

How to mitigate the risks?

To navigate these turbulent waters, a comprehensive approach is required. This involves not just technical safeguards, but also establishing a framework that ensures accountability and transparency in AI systems. It’s about creating a culture where security comes first and every stakeholder understands their role in protecting the integrity of AI. This includes regular audits, ensuring data quality, and maintaining a vigilant stance against potential threats. By doing so, the company can fortify its defenses, making AI a reliable ally.

Secure coding and code reviews

Developers need to write code with security in mind from the outset, which includes validating inputs to prevent injection attacks, handling errors securely to avoid exposing sensitive information, and ensuring secure communication between different parts of the application. Peer reviews and automated security scanning are essential in this process, helping to identify potential security issues and improve code quality.
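As one small example of preventing injection attacks, the sketch below uses a parameterized query with Python’s built-in `sqlite3` module. The `users` table and the `find_user` helper are hypothetical and exist only to show the pattern.

```python
import sqlite3

def find_user(conn, username):
    # The ? placeholder makes the driver treat username strictly as data,
    # so quotes inside it cannot change the structure of the SQL statement.
    cur = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cur.fetchall()

# Hypothetical in-memory database for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT NOT NULL)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

legit = find_user(conn, "alice")
attack = find_user(conn, "' OR '1'='1")
```

Had the query been assembled with string concatenation, the second input would have matched every row. With the placeholder it matches nothing, because no user has that literal name.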

Train developers and utilize resources

Regular training sessions keep developers updated on the latest security threats and best practices, empowering them to contribute actively to the organization’s security posture. Utilizing well-maintained and trusted libraries and frameworks also plays a significant role in reducing the risk of vulnerabilities.

Use secure APIs

When integrating external services or data, developers must ensure secure API use, validating and sanitizing any received data to prevent security breaches.
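Validating received data can be as simple as rejecting anything that does not match the shape you expect. The following sketch assumes a hypothetical external build service that returns a JSON object with `build_id` and `status` fields; both the field names and the allowed status values are invented for the example.

```python
import json

# Hypothetical set of values the caller is prepared to handle.
ALLOWED_STATUSES = {"queued", "running", "passed", "failed"}

def parse_build_status(raw_body):
    """Validate a JSON payload and return only the fields we trust."""
    try:
        data = json.loads(raw_body)
    except ValueError as exc:
        raise ValueError("response is not valid JSON") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    build_id = data.get("build_id")
    status = data.get("status")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(build_id, bool) or not isinstance(build_id, int) or build_id <= 0:
        raise ValueError("build_id must be a positive integer")
    if not isinstance(status, str) or status not in ALLOWED_STATUSES:
        raise ValueError("unexpected status value")
    # Unknown extra fields are dropped instead of being passed along.
    return {"build_id": build_id, "status": status}
```

Returning a fresh dictionary with only the checked fields keeps unexpected data from flowing further into the application.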

Ensure the security of the CI/CD pipeline

Protecting the CI/CD pipeline is paramount. This includes securing code repositories, ensuring the integrity of the deployment process, and conducting continuous vulnerability checks in the codebase and dependencies as an integral part of the build process. Additionally, focusing on environment protection and artifact integrity helps in safeguarding the application before and after it goes live.
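One check that can run as part of the build is a scan for hardcoded secrets before code reaches the repository. The sketch below is a simplified illustration with just two patterns; it is not a replacement for a dedicated scanner, and the `scan_text` helper and the sample configuration are assumptions made for the example. The key shown is the well-known placeholder from AWS documentation, not a real credential.

```python
import re

# Illustrative patterns only; real scanners ship far larger rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}

def scan_text(text):
    """Return (line_number, pattern_name) for every suspicious line."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, name))
    return findings

# Hypothetical config file content.
sample = 'region = "eu-west-1"\naws_key = "AKIAIOSFODNN7EXAMPLE"\n'
findings = scan_text(sample)
```

A pipeline step could fail the build whenever `findings` is not empty, so the secret never lands in a shared branch.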

Keep up with governance, compliance, and industry standards

To maintain a secure DevOps environment, it is imperative to integrate robust governance structures with adherence to industry standards and compliance requirements. This ensures a secure development lifecycle, consistent policy enforcement, and clear role definitions, all while aligning with recognized security benchmarks for comprehensive coverage throughout the software development and deployment phases.

Key industry standards such as ISO 27001, SOC 2, PCI DSS, GDPR, and HIPAA provide a clear roadmap for secure operations, and their integration into DevOps practices is facilitated through automated compliance checks within the CI/CD pipeline. This ensures continuous monitoring, prompt identification, and addressing of any deviations, enhancing the security measures in place.

Human supervision

Human oversight in AI integration within DevOps is essential to ensure a balanced and secure digital environment. While AI enhances efficiency in data processing and decision-making, human supervision is crucial, particularly when AI’s decision-making is opaque or in critical scenarios.

Humans bring ethical understanding, accountability, and common sense, ensuring AI operates within ethical boundaries and aligns with organizational objectives. This oversight helps in making strategic decisions, maintaining a robust security posture, and mitigating risks associated with AI vulnerabilities.

By integrating human insight with AI capabilities, organizations can optimize their processes, safeguard against potential vulnerabilities, and ensure responsible and secure technology use. This approach ensures that AI-driven automation serves the organization’s objectives while maintaining a secure and ethical environment.

Secure data handling and encryption

All sensitive information, whether in transit across networks or at rest in databases, must be encrypted using robust protocols such as TLS for data in transit and AES for data at rest. Key management is a critical aspect of this process, necessitating the use of secure key management systems and practices, including regular key rotations and using hardware security modules (HSMs) for storing encryption keys securely.
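For data in transit, here is a minimal sketch of a client-side TLS configuration using Python’s standard `ssl` module. The helper name `make_client_tls_context` is an assumption, and requiring TLS 1.2 as a floor is an illustrative policy choice rather than a universal recommendation. AES encryption at rest is not shown, since it typically relies on a third-party library or on the storage platform itself.

```python
import ssl

def make_client_tls_context():
    # create_default_context() enables certificate and hostname verification.
    ctx = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx

ctx = make_client_tls_context()
```

The resulting context can be passed to standard library clients, for example as the `context` argument of `urllib.request.urlopen`.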

Network security and segmentation

Network security is foundational to safeguarding the DevOps and AI ecosystem. Implementing network segmentation is a strategic move, isolating critical parts of the network to contain potential breaches and minimize lateral movement of threats. Employing secure communication protocols ensures that data is not only encrypted in transit but also authenticated, maintaining data integrity and confidentiality.

To proactively defend against network threats, continuous monitoring of network traffic is essential. Intrusion detection systems (IDS) and intrusion prevention systems (IPS) should be deployed to analyze network traffic for signs of malicious activity, enabling timely detection and response to potential threats. Additionally, implementing security groups and access control lists (ACLs) helps in defining and enforcing network access policies, ensuring that only authorized entities can communicate within the network.
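The logic of an access control list can be sketched in a few lines: traffic is denied unless a rule explicitly allows it. The subnets, ports, and the `is_allowed` helper below are hypothetical and only illustrate the default-deny idea; real ACLs are enforced by firewalls, cloud security groups, or network devices.

```python
import ipaddress

# Hypothetical allowlist: which source networks may reach which destination port.
ACL_RULES = [
    (ipaddress.ip_network("10.0.1.0/24"), 5432),  # app subnet to database
    (ipaddress.ip_network("10.0.2.0/24"), 443),   # CI runners to artifact store
]

def is_allowed(source_ip, dest_port, rules=ACL_RULES):
    """Default deny: allow only if some rule matches both network and port."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in net and dest_port == port for net, port in rules)
```

With these rules, a CI runner at 10.0.2.7 can reach port 443 but not the database port, which limits lateral movement if that runner is compromised.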

Backup of your environment

Regular backups can help you overcome unpleasant situations, like outages and ransomware attacks. We shouldn’t forget that backup is the final line of defense. Hence, to guarantee your company’s data protection, your backup should follow backup best practices, including automated scheduled backups of all your data, a choice of storage, unlimited retention to keep your data for as long as you need, compliance with the 3-2-1 backup rule, encryption, and ransomware protection.
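The 3-2-1 backup rule is commonly stated as: keep at least three copies of your data, on at least two different types of storage, with at least one copy off-site. The sketch below turns that into a simple check. The `BackupCopy` type and the example copies are hypothetical, and the sketch assumes the production data counts as one of the three copies.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BackupCopy:
    name: str
    storage_type: str  # for example "primary disk", "nas", "object storage"
    offsite: bool

def satisfies_3_2_1(copies):
    """Check for 3+ copies, 2+ storage types, and 1+ off-site copy."""
    return (
        len(copies) >= 3
        and len({c.storage_type for c in copies}) >= 2
        and any(c.offsite for c in copies)
    )

# Hypothetical setup for illustration.
copies = [
    BackupCopy("production repository", "primary disk", offsite=False),
    BackupCopy("local NAS backup", "nas", offsite=False),
    BackupCopy("cloud bucket backup", "object storage", offsite=True),
]
compliant = satisfies_3_2_1(copies)
```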

You can read more about backup best practices:

📌 GitHub backup best practices

📌 Bitbucket backup best practices

📌 GitLab backup best practices

📌 Jira backup best practices

Conclusion

As we stand on the cusp of a new era in technology, the fusion of AI and DevOps promises to redefine the way we develop, deploy, and interact with digital solutions. AI algorithms will become more sophisticated, capable of self-healing and adapting to threats in real-time. However, attackers too will harness advanced AI, leading to an escalated arms race in cybersecurity. DevOps practices will further embrace edge computing and decentralized systems, spreading data across multiple nodes and potentially reducing single points of failure but also increasing the attack surface.

One principle remains constant – the importance of continuous learning and adaptation. Security isn’t a one-time measure but an ongoing commitment. As threats evolve, so must our defenses. Organizations, developers, and security professionals alike must foster a culture of vigilance, always staying informed, always adapting, and always anticipating the next challenge. The fusion of AI and DevOps holds immense potential, but realizing this potential safely and securely is a journey, not a destination.

Don’t play hide-and-seek with cybercriminals – take proactive measures and protect your data.
