The State of Azure DevOps Threat Landscape – 2024 In Review

The year 2024 is over, so it’s time to sum up which threats were the most dangerous for DevOps and PM teams. Outages, degraded service performance, vulnerabilities, cyberattacks, ransomware: all of these made headlines throughout the year. Thus, for the third year in a row, we’ve decided to analyze incidents related to Git hosting services, such as Azure DevOps, GitHub, GitLab, and Atlassian.

The first article in our DevOps threat landscape series is dedicated to Azure DevOps. Let’s dive into the news, reports, and the Azure DevOps Status page to see which threats Azure DevOps users had to deal with in 2024.

DECEMBER

Azure DevOps Status: 3 incidents

⚠️ Incidents that had a degraded impact – 3 incidents

⌛ Total time of incidents with degraded performance – 39 hours 21 minutes

In December, some Azure DevOps users in Europe and Brazil experienced 3 incidents with degraded impact, totaling almost 40 hours.

These 3 performance degradations affected Azure DevOps services including Boards, Repos, Pipelines, Test Plans, Artifacts, and other services.

NOVEMBER

Azure DevOps Status: 12 incidents

⚠️ Incidents that had a degraded impact – 12 incidents

⌛ Total time of incidents with degraded performance – 19 hours 55 minutes

Azure DevOps experienced 12 incidents in November. Microsoft classified all of them as having degraded impact, and together they totaled around 20 hours of disruption.

The most significant incident occurred on November 18th: for over 6 hours, users in North Europe had difficulties accessing Boards, Repos, Pipelines, and Test Plans.

Other incidents were shorter and affected different regions, including Asia Pacific, the United States, Australia, and Brazil.

Among the services impacted in November were Pipelines, Boards, Repos, and other core services.

OCTOBER

Azure DevOps Status: 7 incidents

⚠️ Incidents that had a degraded impact – 7 incidents

⌛ Total time of incidents with degraded performance – 42 hours 55 minutes

In October, Azure DevOps users worldwide experienced 7 incidents with degraded impact, with a total disruption time of almost 43 hours.

The incidents included brief availability degradations across Test Plans, Boards, Repos, Pipelines, and other services.

SEPTEMBER

Azure DevOps Status: 6 incidents

⚠️ Incidents that had a degraded impact – 6 incidents

⌛ Total time of incidents with degraded performance – 168 hours 34 min

In September, Azure DevOps faced 6 incidents with degraded impact, totaling more than 168 hours of disruption… just over 7 days! The most prolonged was a 93-hour 28-minute issue that affected connections in Pipelines.

Other smaller incidents impacted Artifacts and other Azure DevOps services.

AUGUST

Azure DevOps Status: 5 incidents

🚨 Incidents that had a major impact – 1 incident

⌛ Total time of incidents with unhealthy performance – 1 hour 21 minutes

⚠️ Incidents that had a degraded impact – 4 incidents

⌛ Total time of incidents with degraded performance – 11 hours 8 minutes

Azure DevOps users may have experienced 5 incidents of different severity in August. The total disruption time amounted to 12 hours and 29 minutes.

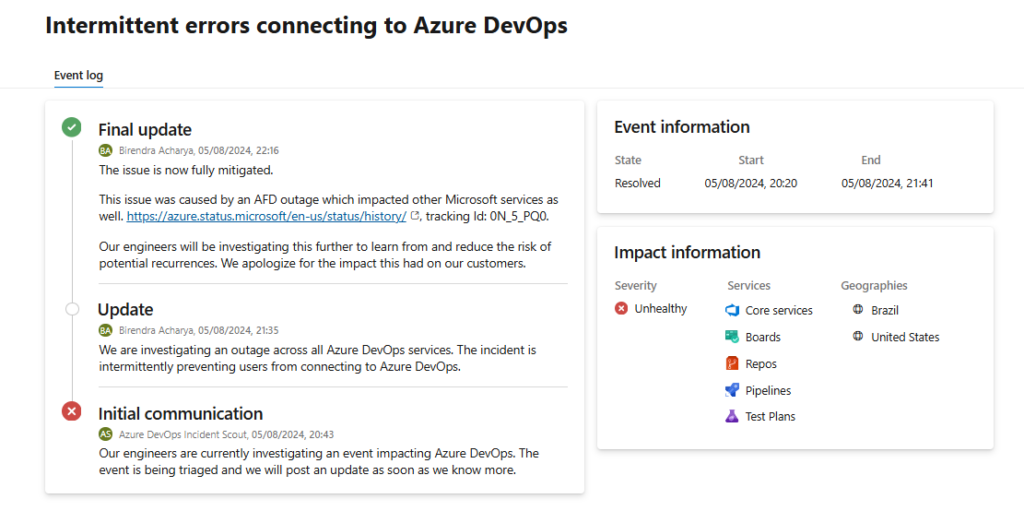

The major incident, lasting 1 hour and 21 minutes on August 5, caused intermittent errors across core services like Boards, Repos, and Pipelines in Brazil and the United States.

Services across North and Latin America are taken down due to Microsoft Azure outage

Multiple Azure customers across North and Latin America were taken down due to a Microsoft Azure outage. The company said that the incident impacted services that leverage Azure Front Door (AFD).

As Microsoft stated, the incident happened due to “a recent configuration change made by an internal service team that uses AFD. Once that change was understood as the trigger event, we initiated a rollback of the configuration change which fully mitigated all customer impact.”

Source: Azure DevOps Status

As a result, for around 2 hours, Azure DevOps users reported errors connecting to Azure services and problems logging in.

This outage followed another massive incident: a 9-hour Azure outage that impacted many Azure and Microsoft 365 services. That outage was caused by a volumetric TCP SYN flood DDoS attack that targeted many Azure Front Door and CDN sites.

JULY

Azure DevOps Status: 22 incidents

⚠️ Incidents that had a degraded impact – 22 incidents

⌛ Total time of incidents with degraded performance – 376 hours 08 minutes

In July, Azure DevOps users around the world saw 22 incidents, all with degraded impact, for a total disruption time of more than 376 hours (over 15 days!).

Key incidents included a disruption of more than 275 hours affecting test case order changes across multiple regions and a 38-hour availability degradation in Service Hooks in Southeast Asia (SEA).

Other incidents affected core Azure DevOps services such as Boards, Pipelines, Test Plans, and Repos across various regions, including Europe, South America, and North America.

Storage incident in the Central US impacted Azure DevOps users all over the world

On July 18th, Azure DevOps users may have experienced issues accessing their Azure DevOps organizations.

In their post-mortem, Microsoft explained that “This outage lasted roughly from 21:40 to 02:55 UTC. During this outage, customers may have experienced a range of impact from a full outage of their organization to partial degradation with certain functionality unavailable such as Artifacts, Packaging, Test, etc.”

The outage was caused by VM availability issues in the Central US region, which made all Azure DevOps organizations located in that region inaccessible. For over an hour, users received a 503 “Service unavailable” message when trying to access their Azure DevOps instances. Moreover, around 1.4% of organizations outside the Central US region may also have experienced some performance degradation at that time.

Source: Azure DevOps post-mortem: request failure rates

JUNE

Azure DevOps Status: 15 incidents

⚠️ Incidents that had a degraded impact – 15 incidents

⌛ Total time of incidents with degraded performance – 21 hours 30 minutes

All the incidents Azure DevOps users may have faced in June were of degraded severity, with a total disruption time of more than 21 hours.

The most significant incident was a 7-hour 26-minute service connection degradation affecting Pipelines.

Other incidents were much shorter and impacted Azure DevOps services such as Boards, Pipelines, Repos, and Test Plans.

Ten Azure services, including Azure DevOps, Azure Load Testing, Azure API Management, and Application Insights, were found vulnerable to a bug that allowed an attacker to bypass firewall rules and gain unauthorized access to cloud resources by abusing Azure Service Tags.

A researcher from the cybersecurity firm Tenable stated:

“This vulnerability enables an attacker to control server-side requests, thus impersonating trusted Azure services. This enables the attacker to bypass network controls based on Service Tags, which are often used to prevent public access to Azure customers’ internal assets, data, and services.”

While there’s no evidence of this vulnerability being exploited in the wild, Microsoft advises customers to review their use of Service Tags, strengthen validation controls, and implement robust authentication to ensure only trusted traffic is allowed.

In its guidance issued on June 3rd, 2024, Microsoft states that “Service tags are not to be treated as a security boundary and should only be used as a routing mechanism in conjunction with validation. […] Service tags are not a comprehensive way to secure traffic to a customer’s origin and do not replace input validation to prevent vulnerabilities that may be associated with web requests.”

Source: The Hacker News / Bleeping Computer
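To illustrate Microsoft's point that a Service Tag should act only as a routing filter, here is a minimal sketch of an origin-side check that requires both a source-IP match and an authenticated request. It rests on loudly stated assumptions: the CIDR ranges are RFC 5737 documentation placeholders, not real Service Tag prefixes, and the HMAC shared-secret scheme (`SHARED_SECRET`, `accept_request`) is hypothetical. In practice, the ranges would come from Microsoft's published Service Tag data and the authentication from your own identity setup.

```python
import hashlib
import hmac
import ipaddress

# Placeholder prefixes standing in for a Service Tag's address ranges.
# These are RFC 5737 documentation ranges, NOT real Azure prefixes.
SERVICE_TAG_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]

# Hypothetical shared secret; a real deployment would use proper
# authentication (e.g. tokens issued by an identity provider).
SHARED_SECRET = b"replace-with-a-real-secret"


def ip_in_service_tag(source_ip: str) -> bool:
    """Routing-level check: is the caller inside the tagged ranges?"""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in net for net in SERVICE_TAG_RANGES)


def signature_is_valid(body: bytes, signature_hex: str) -> bool:
    """Request-level check: was the body signed with our secret?"""
    expected = hmac.new(SHARED_SECRET, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking timing information.
    return hmac.compare_digest(expected, signature_hex)


def accept_request(source_ip: str, body: bytes, signature_hex: str) -> bool:
    # An IP inside a Service Tag only shows the request came from an Azure
    # service, which other tenants (including an attacker) may also use,
    # so it is never sufficient on its own.
    if not ip_in_service_tag(source_ip):
        return False
    return signature_is_valid(body, signature_hex)


if __name__ == "__main__":
    body = b'{"event": "build.completed"}'
    good_sig = hmac.new(SHARED_SECRET, body, hashlib.sha256).hexdigest()
    print(accept_request("192.0.2.10", body, good_sig))    # True
    print(accept_request("192.0.2.10", body, "deadbeef"))  # False
    print(accept_request("203.0.113.5", body, good_sig))   # False
```

The second call is the case the Tenable research is about: traffic from a trusted range that still fails validation is rejected.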

MAY

Azure DevOps Status: 10 incidents

⚠️ Incidents that had a degraded impact – 10 incidents

⌛ Total time of incidents with degraded performance – 18 hours 38 minutes

In May, Azure DevOps encountered 10 incidents with a degraded impact, amounting to a total disruption time of over 18 hours.

Users in all geographies may have experienced disruptions while working with Boards, Repos, Pipelines, and other Azure DevOps services. The longest incident that month, lasting 6 hours and 56 minutes, involved failures in Pipelines using Workload Identity federation-based ARM service connections.

APRIL

Azure DevOps Status: 12 incidents

⚠️ Incidents that had a degraded impact – 12 incidents

⌛ Total time of incidents with degraded performance – 41 hours 25 min

Azure DevOps users in different locations may have experienced 12 incidents with degraded impact, ranging from a few minutes to several hours. In total, Azure DevOps experienced more than 41 hours of disruption in April.

The longest incident lasted over 19 hours. During that time, Azure DevOps users around the world may have experienced slowness and disconnections affecting Boards, Repos, Pipelines, Artifacts, and other services.

Failures and slowness across Azure DevOps in all geographies

On April 25th, Azure DevOps users around the world may have experienced degraded service availability in the form of slowness and intermittent failures across the service. The issue stemmed from a severe performance regression in a frequently called stored procedure within the Token Service database, which increased its execution time nearly 5000-fold.

Source: Azure DevOps post-mortem: Global request failure rate %

As Microsoft later stated in its post-mortem, “The incident was caused by a significant regression in the performance of a stored procedure with a high frequency of calls in the Token Service.”

Affected Azure DevOps experiences included Azure Pipelines, with an average delay of over 3 minutes; Personal Access Token (PAT) usage; and Azure DevOps extensions, which saw increased failure rates. The global request failure rate peaked at around 20%.

Reduced availability of Azure DevOps services across the globe

For a few days (April 22 to April 24), some Azure DevOps users experienced network-related errors, unexpected cancellations, and slowness when trying to access large files over HTTPS. This reduced the availability of Azure Repos, Boards, and Pipelines.

As Microsoft explained in its post-mortem, the cause turned out to be an update that had been rolled out to Azure Front Door earlier:

“As part of ongoing work to make Azure Front Door more reliable, the team rolled out an update, which included a specific change for how packets are processed. Due to this change, some traffic was not processed correctly, which triggered clients to resend the packets, and this led to higher latency. As such, this issue primarily affected scenarios with large downloads and responses.”

Azure DevOps engineers quickly detected and addressed the issue.
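Microsoft's explanation notes that the problem mainly hit large downloads and responses. A simplified model makes this intuitive. It is our own illustration, not Microsoft's analysis, and it assumes each packet is independently mishandled with a small probability p, so the client has to resend it. For a transfer of n packets:

```latex
P(\text{at least one resend}) = 1 - (1 - p)^{n}
\qquad
\mathbb{E}[\text{resends}] = \frac{n\,p}{1 - p} \approx n\,p
```

With an assumed p = 0.001, a 10-packet response has only about a 1% chance of needing any resend, while a 10,000-packet download almost certainly needs some (1 − 0.999^10000 ≈ 0.99995) and should expect about 10 resends, each adding latency.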

MARCH

Azure DevOps Status: 7 incidents

⚠️ Incidents that had a degraded impact – 7 incidents

⌛ Total time of incidents with degraded performance – 36 hours 22 min

In March 2024, Azure DevOps experienced seven incidents resulting in degraded service across various regions, totaling over 36 hours of disruption.

The most significant issue happened on March 27–28, when Analytics dashboard widgets failed to load globally for over 22 hours.

Other incidents included availability degradations in Central US, West Europe, and the UK, affecting Boards, Repos, Pipelines, and Test Plans. Artifacts was also impacted twice, with degraded performance in the West Europe and Central US regions.

FEBRUARY

Azure DevOps Status: 12 incidents

⚠️ Incidents that had a degraded impact – 12 incidents

⌛ Total time of incidents with degraded performance – 48 hours 45 min

Azure DevOps experienced 12 incidents which led to over 48 hours of degraded service across multiple regions in February 2024.

The most prolonged disruption lasted over 26 hours, affecting extensions in the United States.

Europe saw the highest concentration of issues, with multiple availability degradations impacting Boards, Repos, Pipelines, and Artifacts, particularly in West Europe.

Other incidents included pipeline delays in the UK, a global issue preventing Pipeline Artifact downloads, and deprecated pipeline tasks becoming unusable.

Critical Azure DevOps flaw could allow remote code execution

Microsoft addressed 73 security vulnerabilities and 2 actively exploited zero-days in February. Among the patches for various Microsoft products was one for Azure DevOps Server addressing CVE-2024-20667, a security flaw rated “Important” in severity.

By exploiting the vulnerability, an attacker could run unauthorized code on the user’s Azure DevOps Server and potentially compromise sensitive data or disrupt the user’s services. However, a successful attack requires specific conditions:

“Successful exploitation of this vulnerability requires the attacker to have Queue Build permissions and for the target Azure DevOps pipeline to meet certain conditions for an attacker to exploit this vulnerability,” is stated in Microsoft’s security updates.

The February updates are essential for users to protect their Azure DevOps Server against this remote code execution bug.

JANUARY

Complete information about the incidents in January is not available.

Conclusion

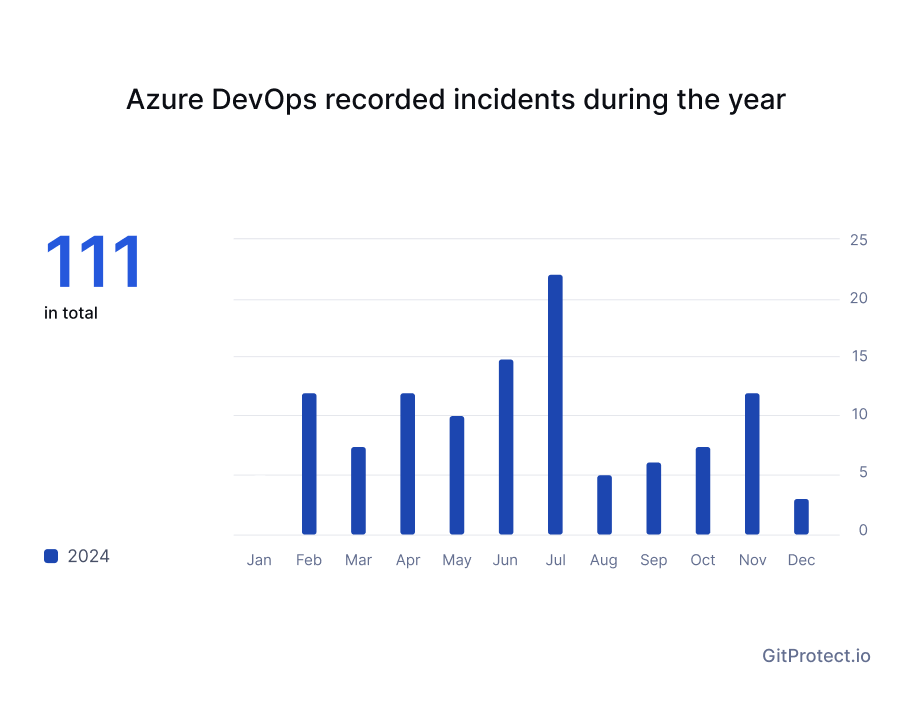

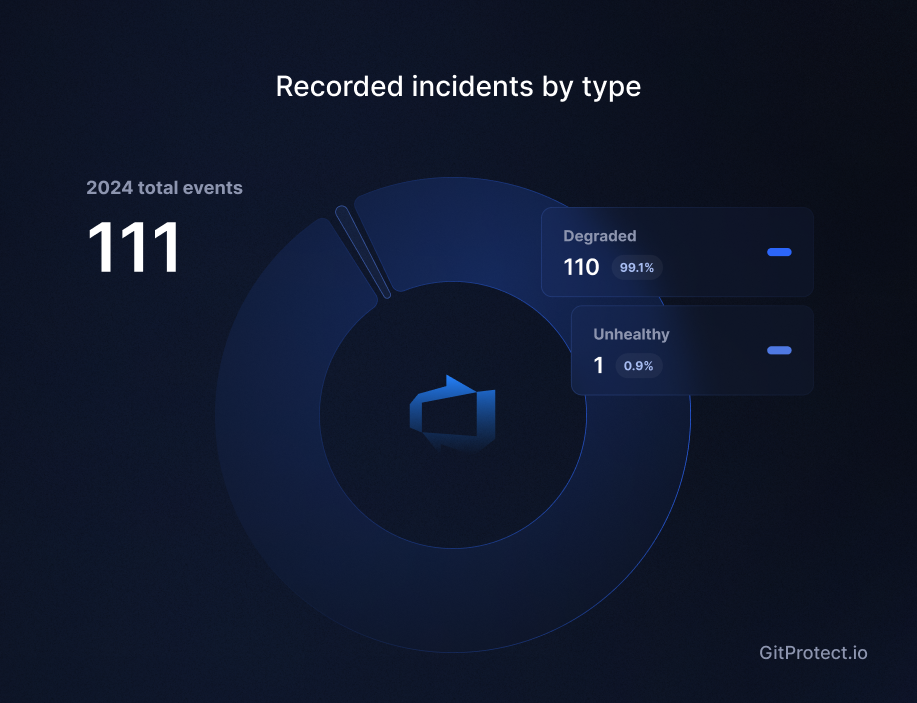

After analyzing all the events that impacted Azure DevOps and thoroughly reviewing the Azure DevOps Status page (we were able to analyze only the period from February to December 2024), we counted 111 incidents (110 with degraded impact and 1 with major impact), with a total disruption time of 826 hours and 2 minutes. Assuming an 8-hour working day, Azure DevOps experienced some kind of disruption for about 103 working days, including an outage that affected users in Brazil and the United States.
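As a sanity check, the yearly totals can be reproduced from the monthly figures reported above. The sketch below sums them in Python; August's major (1h21m) and degraded (11h08m) time are combined into one entry, and the 8-hour working day used for the last line is our assumption.

```python
# Monthly figures from the Azure DevOps Status summaries above
# (February to December 2024): (incidents, hours, minutes).
# August combines its major (1h21m) and degraded (11h08m) incidents.
MONTHLY = {
    "Feb": (12, 48, 45),
    "Mar": (7, 36, 22),
    "Apr": (12, 41, 25),
    "May": (10, 18, 38),
    "Jun": (15, 21, 30),
    "Jul": (22, 376, 8),
    "Aug": (5, 12, 29),
    "Sep": (6, 168, 34),
    "Oct": (7, 42, 55),
    "Nov": (12, 19, 55),
    "Dec": (3, 39, 21),
}

WORKDAY_HOURS = 8  # assumption: an 8-hour working day


def tally(monthly):
    """Return (total incidents, total disruption in minutes)."""
    incidents = sum(n for n, _, _ in monthly.values())
    minutes = sum(h * 60 + m for _, h, m in monthly.values())
    return incidents, minutes


incidents, minutes = tally(MONTHLY)
hours, mins = divmod(minutes, 60)
print(f"{incidents} incidents, {hours}h {mins:02d}m")    # 111 incidents, 826h 02m
print(f"{minutes / 60 / WORKDAY_HOURS:.1f} working days")  # 103.3 working days
```

Dividing by 24 instead of 8 would give roughly 34 calendar days of disruption.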

Let’s not forget that Azure DevOps is a secure platform, and Microsoft takes the necessary measures to keep its services available. It patches vulnerabilities and issues updates and guidance so that Azure DevOps users know how to deal with them. Still, Microsoft follows the Shared Responsibility Model: Microsoft is responsible for keeping its services available, while users are responsible for their data and its security, availability, and recoverability.

To have peace of mind that their source code and project data are secured, Azure DevOps users should implement security best practices, which include MFA, monitoring, backup, etc.
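Monitoring can start with the same source this review is based on. The sketch below assumes Microsoft's public Azure DevOps status health REST endpoint (`https://status.dev.azure.com/_apis/status/health` with `api-version=7.1-preview.1`) and a response shaped as `services` → `geographies` → `health`. Both the URL and the field names reflect our reading of the REST reference and should be verified before use. The function lists every service and geography that is not reported as healthy.

```python
import json
import urllib.request

# Assumed endpoint and api-version for the public Azure DevOps status
# health API; check Microsoft's REST API reference before relying on it.
STATUS_URL = (
    "https://status.dev.azure.com/_apis/status/health"
    "?api-version=7.1-preview.1"
)


def fetch_status(url: str = STATUS_URL, timeout: float = 10.0) -> dict:
    """Download and parse the status JSON (requires network access)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def non_healthy(status: dict) -> list[tuple[str, str, str]]:
    """Return (service, geography, health) for every non-healthy entry.

    Assumes the response shape {"services": [{"id": ..., "geographies":
    [{"name": ..., "health": ...}]}]}; missing keys are tolerated.
    """
    problems = []
    for service in status.get("services", []):
        for geo in service.get("geographies", []):
            health = geo.get("health", "unknown")
            if health != "healthy":
                problems.append(
                    (service.get("id", "?"), geo.get("name", "?"), health)
                )
    return problems
```

Run from a scheduled job, `non_healthy(fetch_status())` returns an empty list when everything is healthy; any tuples it does return could be forwarded to an alerting channel of your choice.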

Moreover, to meet not only security but also compliance requirements, Azure DevOps users should consider backing up their DevOps and PM environments. GitProtect backup and DR software for Azure DevOps helps admins ensure that their company’s critical data is accessible and recoverable in the event of any disruption. The solution helps organizations follow backup best practices that leave no room for data loss.
