Bitbucket Backup to S3

Companies that use Bitbucket or another Git-based platform as their primary source control keep repeating one truism: preventing data loss is vital to maintaining business continuity. One of the most important ways to meet that challenge is to protect the data in S3-compatible cloud storage.

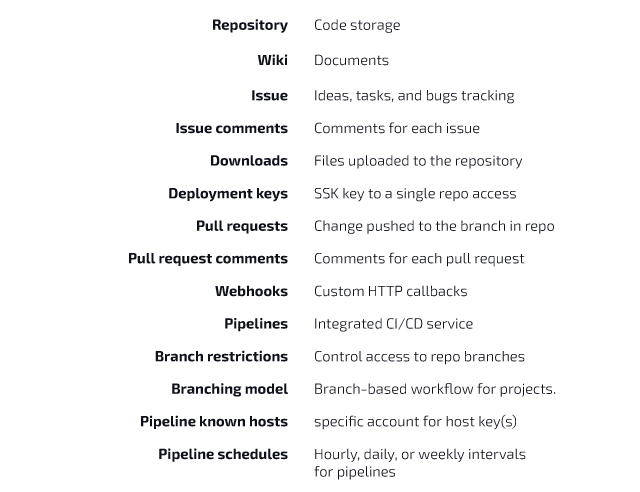

All the more so because saving the repository alone is not enough. It’s crucial that the backup plan also includes metadata, such as pull requests, comments, and history.

S3 for Bitbucket repositories

Bitbucket Cloud services don’t inherently offer native backup and restore features, so users must create or implement their own solutions (or tools).

We do not use these backups to revert customer-initiated destructive changes, such as fields overwritten using scripts, or deleted issues, projects, or sites. To avoid data loss, we recommend making regular backups.

Source: Atlassian security practices under the Shared Responsibility Model.

A similar problem also affects other Git-based platforms, such as GitLab or GitHub.

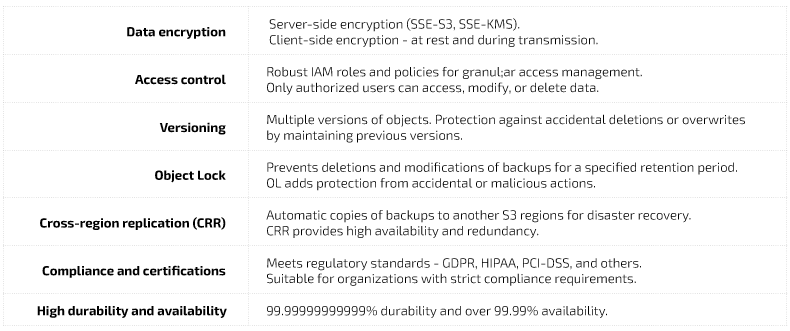

Given the distributed nature of cloud repositories and the need for quick recovery in the case of information loss, S3 (Simple Storage Service) and S3-compatible storage currently offer the best possibilities when it comes to:

Example methods of backing up Bitbucket repository data to S3

There are several ways to store Bitbucket repos in S3, from manual to fully automated methods. It all depends on your scalability and control needs. The most effective approaches include:

Manual backup using AWS CLI

A backup of your Bitbucket instance can be created with the bitbucket.diy-backup.sh script. You can then upload it manually to an S3 bucket with the AWS CLI.

To generate a backup, all it takes is to run the command:

./bitbucket.diy-backup.sh

Then, you can upload the data to S3.

aws s3 cp /path/to/backup.tar.gz s3://bucket-name/

The solution is suitable for small setups or occasional backups where automation is not a priority. However, it lacks scheduling and is prone to human error.

A broader example using Git commands and the AWS CLI:

#!/bin/bash
set -euo pipefail

# Configure AWS CLI access (placeholders; prefer an IAM role or a secrets store over hard-coded keys)
export AWS_ACCESS_KEY_ID=your-access-key
export AWS_SECRET_ACCESS_KEY=your-secret-key
export S3_BUCKET=your-s3-bucket

# Clone the Bitbucket repository
git clone https://bitbucket.org/your-org/your-repo.git /tmp/your-repo

# Sync the cloned repository with the S3 bucket
aws s3 sync /tmp/your-repo "s3://$S3_BUCKET/backups/" --delete

Automated backup with Cron Job and AWS CLI

The method allows you to automate backup and upload utilizing cron jobs on Linux or macOS operating systems or Task Scheduler on Windows.

It starts with the shell script:

#!/bin/bash
/path/to/bitbucket.diy-backup.sh
aws s3 cp /path/to/backup.tar.gz s3://bucket-name/

You can schedule the script to run regularly (e.g., daily at 2:00 a.m.) using cron. Open the crontab for editing:

crontab -e

Then add the entry:

0 2 * * * /path/to/backup-script.sh

Such an approach is best for teams looking for a fully automated process without a complex setup.
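One caveat with a daily schedule is that every run copies the archive to the same object name, so the previous backup is overwritten unless bucket versioning is enabled. A minimal sketch, assuming you want one object per run, builds a dated key instead. The function name `backup_key` and the `bitbucket` prefix are hypothetical and not part of any AWS or Atlassian tooling.

```python
from datetime import datetime, timezone


def backup_key(prefix: str, filename: str, now: datetime) -> str:
    """Build an S3 object key that embeds the UTC date and time of the backup."""
    # Convert to UTC so keys sort chronologically regardless of the server's time zone.
    stamp = now.astimezone(timezone.utc).strftime("%Y/%m/%d/%H%M%S")
    return f"{prefix.strip('/')}/{stamp}-{filename}"


# Hypothetical usage: pass the result as the destination key to `aws s3 cp` or boto3.
example_key = backup_key("bitbucket", "backup.tar.gz", datetime.now(timezone.utc))
```

Grouping keys by year, month, and day also makes it easier to apply retention rules to older backups later.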

S3 sync for multiple backups

The aws s3 sync command uploads entire directories to S3 storage. It keeps the Bitbucket backups synchronized without uploading files individually.

All you have to do is sync the target folder to S3:

aws s3 sync /path/to/backup-directory/ s3://bucket-name/

It’s a good way to avoid redundant uploads, since only new or modified files are transferred. Yet, careful storage management is required to avoid high costs.
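To make the "only new or modified data" behavior concrete, here is a simplified model of the decision. It assumes the default comparison described in the AWS CLI documentation (file size and last-modified time). The `FileInfo` type and the `needs_upload` function are illustrative names, not the CLI's actual implementation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FileInfo:
    size: int     # size in bytes
    mtime: float  # last-modified time as a Unix timestamp


def needs_upload(local: FileInfo, remote: Optional[FileInfo]) -> bool:
    """Return True when the local file should be copied to the bucket."""
    if remote is None:                 # the object is not in the bucket yet
        return True
    if local.size != remote.size:      # the content length changed
        return True
    return local.mtime > remote.mtime  # the local copy is newer
```

A file whose size and timestamp are unchanged is skipped, which is why repeated syncs of a mostly static backup directory transfer little data.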

CI/CD pipeline integration

Backing up Bitbucket repos can be integrated into a CI/CD pipeline using Jenkins or Bitbucket Pipelines. It provides more control and flexibility over the schedule and upload process.

You can create a Jenkins job or Bitbucket Pipeline to run the Bitbucket backup script and use aws s3 cp to upload the backup to an S3 bucket.

Schedule it to run nightly or based on events. This automates backup management and provides centralized control with logging, but it requires setup and maintenance.

pipelines:
  default:
    - step:
        name: Build artifact
        script:
          - mkdir artifact
          - echo "Pipelines is awesome!" > artifact/index.html
        artifacts:
          - artifact/*
    - step:
        name: Deploy to S3
        deployment: production
        script:
          - pipe: atlassian/aws-s3-deploy:0.3.8
            variables:
              AWS_ACCESS_KEY_ID: $AWS_ACCESS_KEY_ID
              AWS_SECRET_ACCESS_KEY: $AWS_SECRET_ACCESS_KEY
              AWS_DEFAULT_REGION: 'us-east-1'
              S3_BUCKET: 'bbci-example-s3-deploy'
              LOCAL_PATH: 'artifact'
              ACL: 'public-read'

Source: Atlassian.

Note that this sample publishes a build artifact with a public-read ACL. For backups, replace the build step with your backup script and keep the bucket private.

AWS SDK for programmatic backup

A custom script built on an AWS SDK, such as boto3 for Python or the SDKs for Java or Node.js, can help you manage the backup and upload process more flexibly.

A simple example is a script that uploads the backup generated by bitbucket.diy-backup.sh:

import boto3

# Credentials are resolved from the environment or the AWS configuration files
s3 = boto3.client('s3')

# Upload the archive as the object "backup.tar.gz"
s3.upload_file('/path/to/backup.tar.gz', 'bucket-name', 'backup.tar.gz')

It may fit teams looking for highly customizable solutions or complex workflows. The method provides fine-grained control yet requires programming expertise.
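As an illustration of that fine-grained control, the sketch below records a SHA-256 checksum of the archive as user-defined object metadata, so a restored file can later be compared against it. The helper names `sha256_of` and `upload_with_checksum` and the `sha256` metadata key are assumptions made for this example. The S3 client is passed in as a parameter so the helpers can be tried without AWS access.

```python
import hashlib


def sha256_of(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Hash the file in chunks so large archives are not loaded into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def upload_with_checksum(s3_client, path: str, bucket: str, key: str) -> str:
    """Upload the archive and store its SHA-256 as user-defined object metadata."""
    checksum = sha256_of(path)
    # ExtraArgs/Metadata is the boto3 way to attach user-defined metadata on upload.
    s3_client.upload_file(path, bucket, key, ExtraArgs={"Metadata": {"sha256": checksum}})
    return checksum
```

With boto3 installed, a call such as `upload_with_checksum(boto3.client('s3'), '/path/to/backup.tar.gz', 'bucket-name', 'backup.tar.gz')` would replace the plain `upload_file` call.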

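Another example:

import os

import boto3

s3 = boto3.client(
    's3',
    aws_access_key_id=os.getenv('AWS_ACCESS_KEY_ID'),
    aws_secret_access_key=os.getenv('AWS_SECRET_ACCESS_KEY'),
)

local_repo_path = '/path/to/your/local/repository'

# Walk the repository tree and upload every file
for root, dirs, files in os.walk(local_repo_path):
    for file in files:
        full_path = os.path.join(root, file)
        # Keep the relative path in the key so same-named files in different folders don't overwrite each other
        relative_path = os.path.relpath(full_path, local_repo_path).replace(os.sep, '/')
        s3.upload_file(full_path, 'your-s3-bucket', f'backups/{relative_path}')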

The script recursively uploads all data in the local repository to S3. Therefore, even newly created elements are part of the backup.

Of course, there are other ways to back up Bitbucket instances, like using:

- third-party backup tools

- AWS Backup service for EC2 instances (Bitbucket hosted on EC2)

- AWS Lambda for event-driven backups (triggered, for example, by CloudWatch Events rules).

Whether you use Git commands, Bitbucket Pipelines, or custom code for automated backups, the main goal is to protect the stored data, including metadata.
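A Git clone does not carry metadata such as pull requests, so custom code has to fetch it separately. The sketch below assumes Bitbucket Cloud's REST API 2.0 pull request listing, which returns pages with a `values` array and a `next` link, and assumes authentication with a bearer access token. The names `iter_pull_requests` and `make_fetcher` are hypothetical, and the exact query parameters should be checked against the current API documentation.

```python
import json
from typing import Callable, Dict, Iterator
from urllib.request import Request, urlopen

API_ROOT = "https://api.bitbucket.org/2.0"


def iter_pull_requests(workspace: str, repo_slug: str,
                       fetch_json: Callable[[str], Dict]) -> Iterator[Dict]:
    """Yield every pull request, following the paginated `next` links."""
    # Assumption: without explicit state filters only open pull requests are listed.
    url = (f"{API_ROOT}/repositories/{workspace}/{repo_slug}/pullrequests"
           "?state=OPEN&state=MERGED&state=DECLINED&state=SUPERSEDED")
    while url:
        page = fetch_json(url)
        yield from page.get("values", [])
        url = page.get("next")  # absent on the last page, which ends the loop


def make_fetcher(token: str) -> Callable[[str], Dict]:
    """Return a fetch function that sends a bearer access token (assumed auth scheme)."""
    def fetch_json(url: str) -> Dict:
        request = Request(url, headers={"Authorization": f"Bearer {token}"})
        with urlopen(request) as response:
            return json.load(response)
    return fetch_json
```

The collected records could be written to a JSON file and uploaded next to the repository archive, for example with `json.dump(list(iter_pull_requests('your-org', 'your-repo', make_fetcher(token))), out_file)`.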

For more convenient and secure data management, consider using the GitProtect backup platform. It’s an all-in-one backup and disaster recovery solution for DevOps.

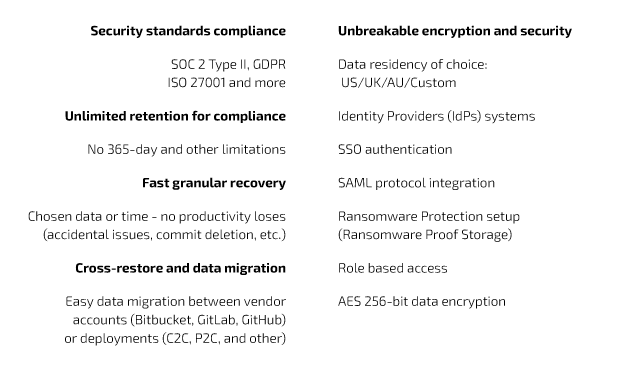

A backup solution to level up the security and convenience

Among its many advantages, a few are worth underlining:

With GitProtect, you can restore repositories with metadata directly to your Bitbucket account at any time. You are also free to utilize Xopero’s unlimited data retention (for archive purposes) and data replication capabilities.

The tool provides you with advanced audit logs and visual statistics. The latter lets you track all actions and quickly spot potential errors. You can also run a backup on demand to react immediately.

The platform keeps you updated with customized emails or Slack notifications (with no log-in needed).

In addition to the above, GitProtect guarantees straightforward and handy backup and restore of your repositories.

Utilizing GitProtect backup solution

The simplest and fastest way to store Bitbucket backups in S3 is to connect your Bitbucket and AWS S3 accounts. First, you need to add the Bitbucket organization to GitProtect. Then, it’s time to prepare the proper cloud location for your data in S3 (AWS).

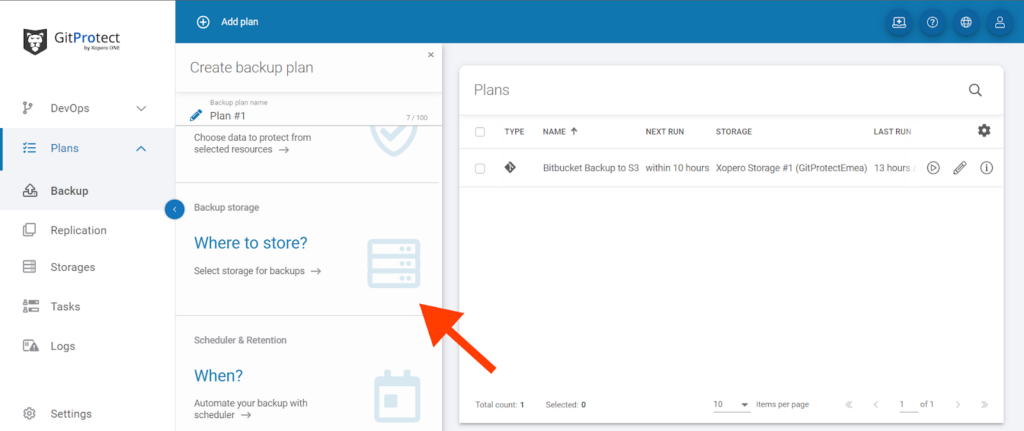

- Select the Plans tab on the right.

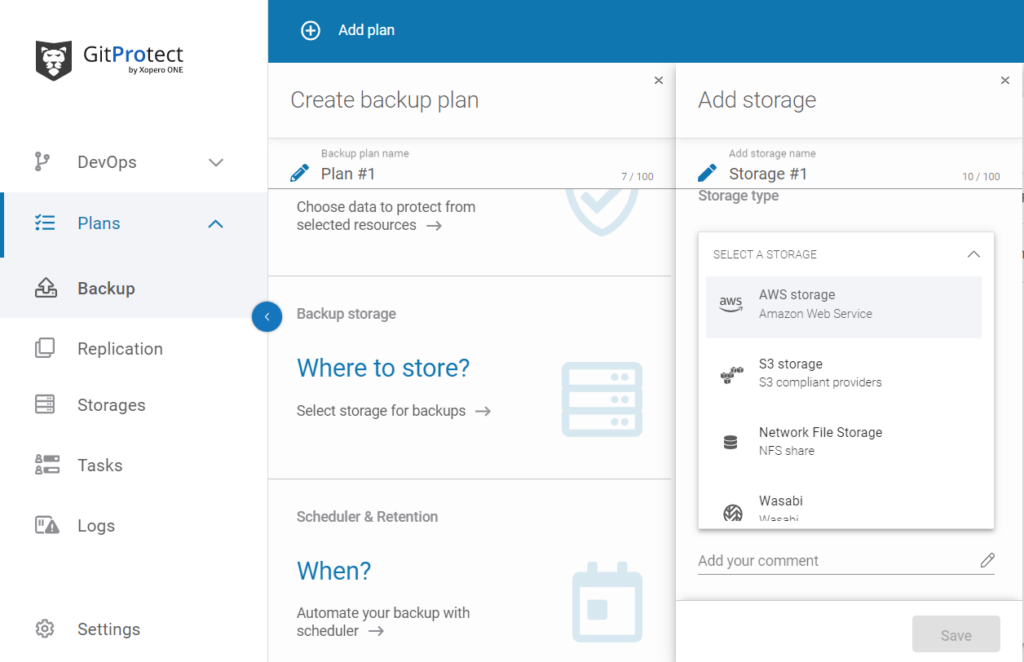

- Click Add plan and open the Where to store section. Select the data you plan to protect.

- Now, set the backup storage location to AWS S3 (or any other you prefer and have an account on). You need to configure the connection and then schedule the backups.

And that’s it! From now on, you can plan, execute, and reconfigure any setup to tailor the platform to your expectations and needs. It can be done for both DC and Cloud Bitbucket instances, depending on what service you use.

Bitbucket Cloud and Data Center (DC). What to consider?

When storing backups in S3 with GitProtect, a few key technical considerations differ between Bitbucket Cloud and Bitbucket Data Center (DC).

Backup size and data structure

- Cloud: Stores primarily source code and other data. It’s easier to back up, but subject to API rate limits.

- DC: Uses large datasets, like application logs, large binaries, etc., which increases backup complexity.

Bandwidth and network

- Cloud: Backup of repositories relies on internet bandwidth, so it is potentially slower.

- DC: Internal network performance applies, but remote S3 storage requires significant bandwidth for large data transfers.

Security and compliance

- Cloud: Atlassian data centers manage the security; however, S3 needs proper access control.

- DC: Custom security with in-house encryption and regulatory requirements (compliance).

Automation and scheduling

- Cloud: Simpler, but with rate limits.

- DC: Custom scheduling and resource allocation for handling large backups (repositories) are necessary.

Restore activity

- Cloud: Straightforward for repositories, but needs consideration for restoring details (e.g., pipelines, comments).

- DC: A robust system for restoring large datasets is a necessity.
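The API rate limits mentioned for Bitbucket Cloud are commonly handled with retries and exponential backoff. The following is a minimal sketch of that pattern, assuming your own request function raises a `RateLimited` exception when the API answers HTTP 429. Both `RateLimited` and `with_backoff` are hypothetical names introduced for this example.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Raised by the caller's request function when the API answers HTTP 429."""


def with_backoff(call: Callable[[], T], retries: int = 5, base_delay: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `call`, waiting 1x, 2x, 4x... the base delay after each rate-limit error."""
    for attempt in range(retries):
        try:
            return call()
        except RateLimited:
            if attempt == retries - 1:
                raise  # give up after the last allowed attempt
            sleep(base_delay * 2 ** attempt)
    raise ValueError("retries must be at least 1")
```

The `sleep` parameter exists only so the waiting can be replaced in tests; in a backup job the default `time.sleep` is used.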

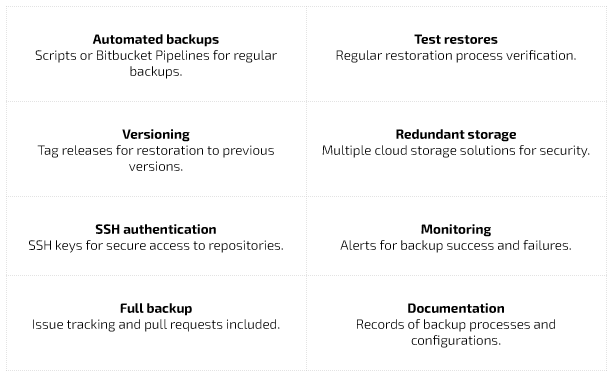

A few best practices for backing up Bitbucket repositories

There’s nothing groundbreaking in saying that securing Bitbucket repositories is not only about using the right solution (S3). It also involves good practices (e.g., versioning, SSH). It’s trivial, yet vital – no matter how experienced and technically proficient your team is.

Here are some examples:

Bitbucket repositories backup process summary

Backing up Bitbucket repositories to S3 is essential for maintaining data integrity and avoiding downtime. A reliable backup strategy covers not only code storage but also the associated details such as pull requests, issue tracking, and pipelines.

That ensures all critical components of your stored repository are secure and that you can access and quickly restore them when needed. That goes for both Bitbucket Cloud and Data Center repositories.

As the all-in-one backup platform, GitProtect streamlines the Bitbucket repositories backup by automating it and allowing flexible configurations. That includes full, incremental, or differential backups. The tool provides technical teams with:

- better control over

- data protection

- data replication

- support for 3-2-1 (3-2-1-1-0) or 4-3-2 backup rules.

It minimizes the risk of information loss and supports business competitiveness.
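The 3-2-1 rule mentioned above (at least three copies of the data, on two different types of media, with one copy offsite) can be expressed as a simple check. This sketch is only an illustration: the `BackupCopy` type and `satisfies_3_2_1` function are hypothetical, and it assumes every copy, including the production data, is listed.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class BackupCopy:
    location: str  # e.g. "office-nas" or "aws-s3-eu-west-1"
    media: str     # e.g. "disk" or "object-storage"
    offsite: bool  # True when stored away from the primary site


def satisfies_3_2_1(copies: Iterable[BackupCopy]) -> bool:
    """Check for three or more copies, two or more media types, and one offsite copy."""
    copies = list(copies)
    return (len(copies) >= 3
            and len({copy.media for copy in copies}) >= 2
            and any(copy.offsite for copy in copies))
```

For instance, production data on disk, a local NAS copy, and an S3 bucket in another region would pass, while three copies on the same on-premises disk array would not.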
