Jira Data Loss Scenarios To Watch Out For (And How To Avoid)

For many DevOps and ITSM workflows, Jira is the nerve center. It’s relied upon by thousands of teams for everything from agile sprint planning to enterprise-scale incident management. However, beneath the robust interface and powerful automation, your Jira data remains fragile – far more than you think.

Scenarions around Jira data loss aren’t a theory. At least nowadays, when such things happen it’s quickly and quietly. When the data breach occurs, it can paralyze entire departments, halt development pipelines, and even erode compliance postures in no time.

Automation as a landmine

In 2024, a well-established European software consultancy fell victim to a catastrophic data loss. It was triggered by a misconfigured global Jira automation rule. One seemingly harmless logic update. It propagated across dozens of projects and inadvertently bulk-deleted thousands of historical issues.

For some reason, the admin thought the cleaning-up rule was removed (with no recovery options):

- sping records

- user stories

- security incidents logs.

Such a case wasn’t about malicious intent. It was a routine refactoring that went wrong. The scale and speed were elements that made it devastating. After all, Jira automation has no “are you sure?” dialog when you trigger a bulk action on global rules. In many configurations, it won’t even notify you before executing.

This is the first lesson: automation doesn’t discriminate between efficiency and destruction – it merely executes. And with execution comes other elements that need to be considered.

Areas to consider regarding potential data loss:

| Scope | Yes, it matters. That’s why it’s important to avoid overly broad rules that impact many projects without granular controls. |

| Safety checks | Implementing confirmation steps (or manual approval) is necessary for destructive actions, even in automation. |

| Visibility | Maintain dashboards and alerts for real-time tracking of automation executions. |

| Recovery preparedness | Robust backup strategies are a must. The same goes for quick restore mechanisms in place prior to automation deployment. |

AI automation is a growing threat

Note that due to more and more use of autonomous AI agents, losing data thanks to AI is a reality. Read the guide below to learn more:

–> AI Data Loss Risks In Jira You Can’t Ignore

AI automation is a growing threat to your data

Note that due to more and more use of autonomous AI agents, losing data thanks to AI is a reality. Read our latest guide on how to address AI-related risks of losing data in Jira.

It’s easy to assume that because Atlassian hosts Jira, data protection is a part of the service. The SLA looks comforting, and the interface feels safe. Of course, that’s a false sense of security. Atlassian operates under a strict Shared Responsibility Model (SRM).

While they guarantee uptime and basic platform availability, data integrity, backups, and recoverability are your (the customer’s) responsibility. And that distinction has costly implications. In the event of accidental deletion or corrupt configuration, Atlassian is not liable, not even when ransomware propagates through integrations.

99% of cloud security failures will be due to customer missteps by 2025.

– Gartner

If your automation rules, workflows, custom fields, or attachments are wiped, you are responsible for having a copy. This gap perception is widespread. Restoration in Jira is limited to narrow windows of 30 days. In some cases, it’s only for specific items and partial.

The key here is to understand what the Jira Cloud user can do and should do.

As was mentioned above, the first thing is grasping the shared responsibility boundary. The line here is straightforward: Atlassian secures the platform while the user cares and protects data. It’s as “simple” as that.

Platform-level outages and vendor-induced deletions

The 2022 Atlassian outage serves as a lesson learned and a clear case study. The problem started with a scripted cleanup of deprecated apps. It was triggered from Atlassian’s internal orchestration layer. Then, the thing cascaded into the deletion of the entire Jira Cloud customer environment(s).

Impacted sites were removed at the database object level, taking out (in a single operation):

- issues

- boards

- workflows

- automation rules

- attachments.

The core trouble is the fact that in Jira Cloud, tenant data is logically separated within Atlassian’s multitenant databases. A destructive operation run in the wrong scope can wipe out an entire tenant without touching others. Restoration then depends entirely on Atlassian’s internal backup cadence and tooling, which is not exposed to customers.

And operational impact? Here’s a quick list, just to name a few:

| Total loss of access during the outage window (days to weeks). |

| No customer-initiated rollback. |

| Delays to sprint completions and blocked releases. |

| In a regulated context, a potential breach of contractual obligations or audit evidence requirements. |

Avoiding such an event requires a parallel backup architecture, external to Atlassian’s environment. It should be capable of full instance replication, including:

- all project and issue data (issues, comments, attachments)

- configuration objects (schemes, workflows, custom fields)

- automation rules and integration settings.

Of course, replication should occur on a schedule meeting the company’s RPO (Recovery Point Objective) for many teams. That means hourly or even near-real-time for high-value projects.

Human error with high-privilege accounts

Human error is one of the things that fuel innovation, science, technology, as well as almost all aspects of people’s activities.

Probability of human error is considerably higher than that of machine error.

– Kenneth Appel (mathematician, co-solved the four-color theorem)

Jira allows global administrators to perform high-impact actions with minimal guardrails. That includes bulk issue deletions, scheme changes, and field removals. A misjudged bulk operation in the Issue Navigator using JQL has the potential to delete thousands of issues at once. Changes to field configurations can wipe values across projects instantly if the field is shared.

For instance, an admin is running a cleanup query with bulk delete permissions:

project = OPS AND created < -365dHe accidentally omitted a status != Closed filter, removing active work in progress. Because issue deletions in Jira Cloud bypass any “soft delete” beyond the 60-day recycle bin, and because that bin does not store historical configurations and automations, full restoration often becomes impossible without an external backup.

All human errors are impatience, a premature breaking off of methodical

procedure, an apparent fencing-in of what is apparently an issue.

– Franz Kafka (The Collected Aphorisms, 1994)

In consequence, the admin may face

- irrecoverable loss of work items after the recycle bin expiry

- broken boards, dashboards, and filters dependent on deleted issues

- loss of historical velocity and lead time metrics used for performance reporting.

Therefore, it becomes obvious that a Jira administrator must adopt least privilege access control (LPAC) principles. That includes:

| Restricting bulk change and delete permissions to a small, vetted admin group. | Enforce change-management approval for configuration edits affecting shared schemes. | Maintain immutable, point-in-time backups for rollback. |

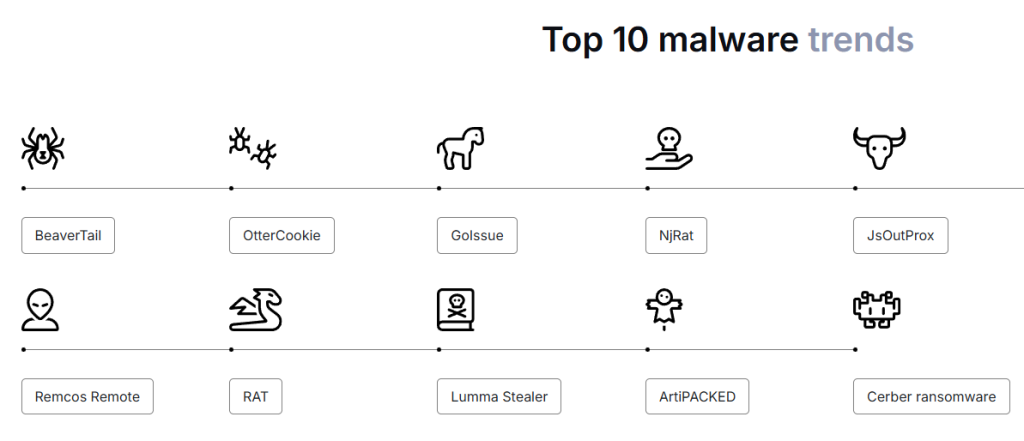

Ransomware and malicious API integrations

While Jira itself is cloud-hosted, its integrations form a broad attack surface. Both OAuth-scoped apps and webhooks can be exploited to modify or delete data if compromised. Consider a malicious integration that issues API calls to bulk-delete issues, strip attachments, or overwrite comments.

If these actions are distributed over days, they can bypass anomaly detection and blend with normal activity. A sophisticated ransomware operator may also target linked Confluence spaces, source repositories, or asset databases, compounding the damage.

Look at the example of an API’s endpoints below:

DELETE /rest/api/3/issue/{issueIdOrKey}

PUT /rest/api/3/issue/{issueIdOrKey}Both demand authentication but no user confirmation, making stolen tokens a critical risk. The latter may entail:

- coordinated removal or corruption of records across multiple projects

- silent compliance data loss until discovered during an audit

- extended recovery times if backup snapshots are also compromised.

Prevention measures against ransomware and malicious API integrations include segregating API credentials. That means never reusing them between automation and third-party apps.

Another element is storing backups in an environment completely isolated from production Atlassian Cloud. At the same time, implementing immutable storage and MFA on backup restore functions will be a smart and strategic move.

Configuration corruption and workflow drift

In Jira, a single configuration object can serve (and often serves) dozens of projects. A workflow scheme edit intended for one project can alter transitions, resolutions, and validators globally. A field context change can hide or wipe values in other projects without warning.

Such a “drift” in workflow often accumulates over time resulting in the following issues:

| New statuses are added without updating board column mappings. |

| Custom fields get renamed or deleted without reviewing dependent automations. |

| Permission schemes get edited in ways that allow unintended access or deletion rights. |

Once values are overwritten at the database level, native Jira tools cannot restore them. Considering the impact, it’s worth noting that the disruption to the automation rule is dependent on specific statuses of fields. The admin can expect renaming or deleting custom fields without reviewing dependent automation. Going further, SLA metrics will be broken due to missing or reset date fields.

For proper Jira administration, it points out the need to regularly export and version control all configuration schemes. They should be paired with scheduled full-instance backups that capture not just issues, but the entire configuration layer.

Migration and consolidation failures

The process of migration from Jira Data Center to Cloud (or merging multiple Cloud sites) involves schema transformations. Attachments may fail to upload due to size limits. At the same time, issue history can be truncated if the migration tool fails to map legacy workflow states to current ones.

Atlassian’s Cloud Migration Assistant doesn’t capture all needed configuration elements, like automation rules and some third-party app data. Without pre-migration backups, these elements are permanently lost.

That means partial project imports are missing attachments or history. Lost status mappings cause broken reporting. Finally, duplicated or orphaned issues require manual reconciliation.

At the source of every error which is blamed on the computer, you’ll find at least

two human errors, one of which is the error of blaming it on the computer.

– Tom Gilb (systems engineer, evolutionary software dev processes)

In turn, to avoid problems, Jira admins need to run full dress-rehearsal migrations into staging environments. Also, using differential comparisons between the source and destination to verify data integrity before production cutover will be helpful.

Compliance failures and legal exposure

In many regulated industries, missing Jira data isn’t just an operational disaster or troublesome event. Their case means a violation of legal obligations and thus financial, trust, and image losses. A deleted change request for a medical device component can breach FDA audit requirements. Also, a missing incident ticket for a financial transaction can violate PCI DSS record retention.

When native recovery windows expire, the organization cannot produce mandated records. It leads to penalties or failed audits. Of course, the company can’t forget about:

- regulatory fines or certification loss

- breach of contractual SLAs with customers

- loss of trust with partners and clients.

Such challenges force Jira admins to design backup and retention policies to exceed the longest applicable regulatory requirement – e.g., often seven years or more. Store backups in compliance-certified environments with encryption at rest and in transit.

The best backup and disaster recovery solution to avoid losing data in Jira

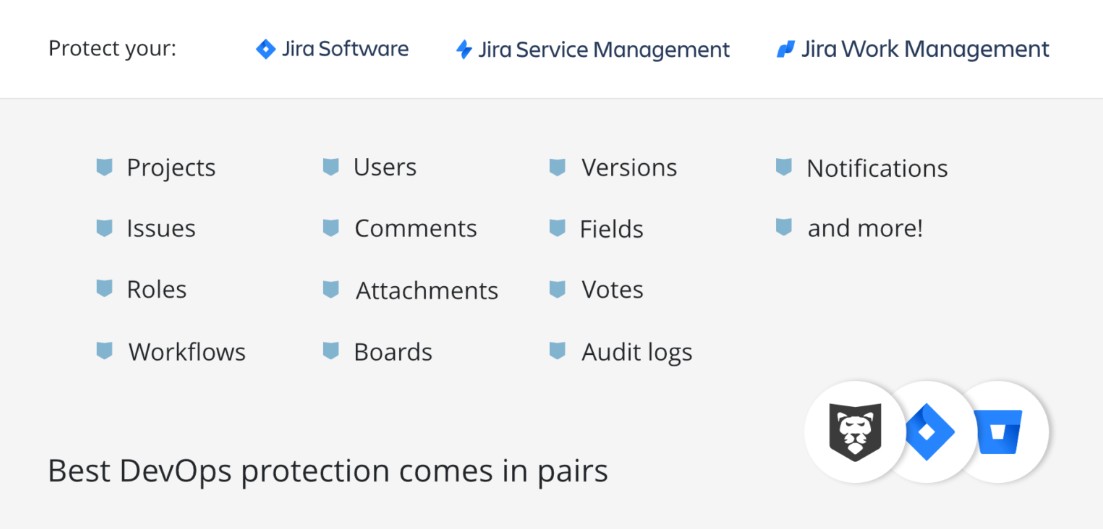

One of the best ways to address (potential) failure scenarios described above is to involve GitProtect – Backup and Disaster Recovery system. It provides a full logical backup of the Jira Cloud platform, capturing both issue-level data and configuration state. The scope of Jira data protection includes:

- issues

- custom fields

- comments

- permission schemes

- attachments

- automation rules

- workflow schemes

- integration settings

Backups can be scheduled to run multiple times per day. They can also be triggered manually. Data is stored in an immutable, versioned form. It allows recovery to any specific point in time. Depending on the incident, the recovery operations can be full-instance or granular, restoring:

- a single project

- issue

- configuration element.

Storage targets include GitProtect’s managed cloud or customer-controlled locations such as AWS S3, Azure Blob Storage, Google Cloud Storage (S3-like), or on-premise systems (NAS). In turn, Jira admins can benefit from off-platform retention for ransomware isolation and compliance with geographic data residency requirements.

All backup and restore operations are logged for auditing. Role-based permission controls access to these operations. The system protects data utilizing TLS 1.2+ (in transit) and AES-256 (at rest) encryption.

GitProtect’s key advantages at a glance:

| Unlimited retention and ransomware protection | Safeguard data with customizable policies and advanced security measures for every scenario. |

| Deployment versatility | Available as SaaS, On-premise, or Hybrid, with data residency options to meet compliance needs. |

| Disaster recovery excellence | Granular restore options and cross-platform recovery (P2C, C2C, C2P) ensure business continuity. |

| Multi-storage technology | Bring your own S3, on-premise, or hybrid storage while enjoying free unlimited storage in all plans. |

| Advanced backup policies | Tailor schedules, backup types, and retention with state-of-the-art technologies like GFS and Forever Incremental. |

| Top-tier security | SOC 2 Type II and ISO 27001 certifications, AES encryption, Zero Trust Architecture, and ransomware defense. |

These capabilities enable organizations to:

- recover after bulk deletions

- avoid vendor-induced environment wipe

- recover automation logic lost in migrations

- maintain compliance evidence

- meet aggressive RTO/RPO requirements

- keep long-term retention requirements beyond native limits.

All the above can be done without dependence on Atlassian’s internal recovery processes. In other words, admins can resolve problems without waiting for Atlassian support or risking incomplete manual reconstructions.

It’s even more important considering native Jira Cloud retention and export functions don’t provide full-instance recovery, configuration capture, or long-term retention beyond Atlassian’s 60-day window.

Data protection as an architectural requirement

In Jira, “protection” only counts when it’s designed for explicit RPO/RPO targets, not vague comfort. It’s about treating projects by criticality, mapping each to a snapshot cadence that actually meets the RPO, and verifying if you can recreate Shh both issues and configuration state (workflows, fields, schemes, automations) within the promised RTO.

If one restore step depends on Atlassian support, you don’t control your outcome.

Architecture, not hope, prevents silent features. Jira admins should keep backups off-platform and immutable, with IAM, keys, and networks isolated from production. The key is to version configuration snapshots so admins can roll back drift without touching live data. Next comes running restore drills on a schedule with synthetic validation:

- rebuilding a target project in a sandbox

- replaying automation

- confirming board mappings and SLAs

- comparing item counts and checksums

- recording the measured restore time and deviations.

Such a report is the evidence when an auditor (or a customer) asks whether the admin can actually recover. To any Jira admin – you don’t own your Jira data because you can export it. You own it when you can restore it on demand within SLA. It’s even if the vendor is down, an admin made a bad bulk change, or an API token went rogue.

That’s what “architectural requirement” means here. Think of resilience by design, proven in practice – just like GitProtect.